Introduction

Overview

Teaching: 15 min

Exercises: 3 min

Questions

What are basic principles for using spreadsheets for good data organization?

Objectives

Describe best practices for organizing data so computers can make the best use of data sets.

Good data organization is the foundation of your research project. Most researchers have data or do data entry in spreadsheets. Spreadsheet programs are very useful graphical interfaces for designing data tables and handling very basic data quality control functions.

Spreadsheet outline

After this lesson, you will be able to:

- Implement best practices in data table formatting

- Identify and address common formatting mistakes

- Understand approaches for handling dates in spreadsheets

- Utilize basic quality control features and data manipulation practices

- Effectively export data from spreadsheet programs

Overall good data practices

Spreadsheets are good for data entry, so a lot of research data ends up in spreadsheets. Much of your time as a researcher will be spent in this ‘data wrangling’ stage. It’s not the most fun, but it’s necessary. We’ll teach you how to think about data organization and some practices for more effective data wrangling.

What this lesson will not teach you

- How to do statistics in a spreadsheet

- How to do plotting in a spreadsheet

- How to write code in spreadsheet programs

If you’re looking to do these things, a good reference is Head First Excel, published by O’Reilly.

Why aren’t we teaching data analysis in spreadsheets?

- Data analysis in spreadsheets usually requires a lot of manual work. If you want to change a parameter or run an analysis with a new dataset, you usually have to redo everything by hand. (We do know that you can create macros, but see the next point.)

- It is also difficult to track or reproduce statistical or plotting analyses done in spreadsheet programs when you want to go back to your work or someone asks for details of your analysis.

Spreadsheet programs

Many spreadsheet programs are available. Since most participants utilize Excel as their primary spreadsheet program, this lesson will make use of Excel examples.

A free spreadsheet program that can also be used is LibreOffice.

Commands may differ a bit between programs, but the general idea is the same.

Exercise

- How many people have used spreadsheets in their research?

- How many people have accidentally done something that made them frustrated or sad?

Spreadsheets encompass a lot of the things we need to be able to do as researchers. We can use them for:

- Data entry

- Organizing data

- Subsetting and sorting data

- Statistics

- Plotting

We do a lot of different operations in spreadsheets. What kind of operations do you do in spreadsheets? Which ones do you think spreadsheets are good for?

Problems with Spreadsheets

Spreadsheets are good for data entry, but in reality we tend to use spreadsheet programs for much more than data entry. We use them to create data tables for publications, to generate summary statistics, and to make figures.

Generating tables for publications in a spreadsheet is not optimal. Often, when formatting a data table for publication, we’re reporting key summary statistics in a way that is not really meant to be read as data, and the table often involves special formatting (merging cells, creating borders, making it pretty). We advise you to do this sort of operation within your document editing software.

The latter two applications, generating statistics and figures, should be used with caution: because of the graphical, drag and drop nature of spreadsheet programs, it can be very difficult, if not impossible, to replicate your steps (much less retrace anyone else’s), particularly if your stats or figures require you to do more complex calculations. Furthermore, in doing calculations in a spreadsheet, it’s easy to accidentally apply a slightly different formula to multiple adjacent cells. When using a command-line based statistics program like R or SAS, it’s practically impossible to apply a calculation to one observation in your dataset but not another unless you’re doing it on purpose.
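To make the contrast concrete, here is a minimal Python sketch (standard library only; the column names and values are hypothetical). A derived column is computed by one expression that runs for every row, so no observation can end up with a slightly different formula:

```python
import csv
import io

# A tiny stand-in for a survey table; in practice you would open a CSV file.
RAW = """record_id,species_id,weight_g
1,DM,40
2,PE,22
3,DM,48
"""

def add_weight_kg(rows):
    """Return new rows with a weight_kg column derived from weight_g.

    The same expression is applied to every row, and the input rows are
    copied rather than modified.
    """
    out = []
    for row in rows:
        new_row = dict(row)  # copy, so the original rows stay untouched
        new_row["weight_kg"] = float(row["weight_g"]) / 1000
        out.append(new_row)
    return out

rows = list(csv.DictReader(io.StringIO(RAW)))
for row in add_weight_kg(rows):
    print(row["record_id"], row["weight_kg"])
```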

Using Spreadsheets for Data Entry and Cleaning

However, there are circumstances where you might want to use a spreadsheet program to produce “quick and dirty” calculations or figures, and data cleaning will help you use some of these features. Data cleaning also puts your data in a better format before you import it into a statistical analysis program. We will show you how to use some features of spreadsheet programs to check your data quality along the way and produce preliminary summary statistics.

In this lesson, we will assume that you are most likely using Excel as your primary spreadsheet program. There are others (Gnumeric, Calc from OpenOffice), and their functionality is similar, but Excel seems to be the program most used by biologists and ecologists.

In this lesson we’re going to talk about:

- Formatting data tables in spreadsheets

- Formatting problems

- Dates as data

- Quality control

- Exporting data

Key Points

Good data organization is the foundation of any research project.

Formatting data tables in Spreadsheets

Overview

Teaching: 15 min

Exercises: 20 min

Questions

How do we format data in spreadsheets for effective data use?

Objectives

Describe best practices for data entry and formatting in spreadsheets.

Apply best practices to arrange variables and observations in a spreadsheet.

The most common mistake made is treating spreadsheet programs like lab notebooks, that is, relying on context, notes in the margin, spatial layout of data and fields to convey information. As humans, we can (usually) interpret these things, but computers don’t view information the same way, and unless we explain to the computer what every single thing means (and that can be hard!), it will not be able to see how our data fits together.

Using the power of computers, we can manage and analyze data in much more effective and faster ways, but to use that power, we have to set up our data for the computer to be able to understand it (and computers are very literal).

This is why it’s extremely important to set up well-formatted tables from the outset, before you even start entering data from your very first preliminary experiment. Data organization is the foundation of your research project. It can make it easier or harder to work with your data throughout your analysis, so it’s worth thinking about when you’re doing your data entry or setting up your experiment. You can set things up in different ways in spreadsheets, but some of these choices can limit your ability to work with the data in other programs, or make it harder for you six months from now, or for your collaborator, to work with the data.

Note: the best layouts/formats (as well as software and interfaces) for data entry and data analysis might be different. It is important to take this into account, and ideally automate the conversion from one to another.

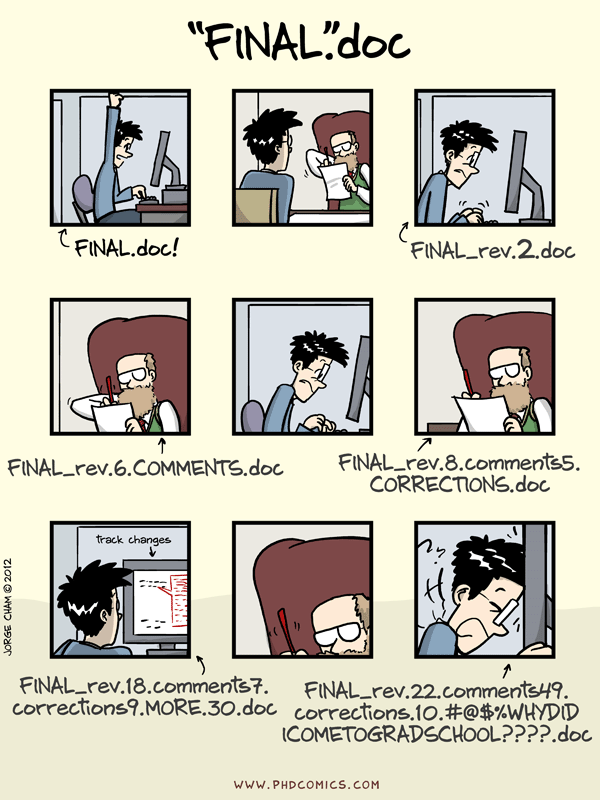

Keeping track of your analyses

When you’re working with spreadsheets, during data clean up or analyses, it’s very easy to end up with a spreadsheet that looks very different from the one you started with. In order to be able to reproduce your analyses or figure out what you did when Reviewer #3 asks for a different analysis, you should

- create a new file with your cleaned or analyzed data. Don’t modify the original dataset, or you will never know where you started!

- keep track of the steps you took in your clean up or analysis. You should track these steps as you would any step in an experiment. We recommend that you do this in a plain text file stored in the same folder as the data file.
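If part of your cleaning is scripted, both habits can be built into the script itself. Below is a minimal Python sketch (standard library only). The file names and the single whitespace-trimming step are hypothetical placeholders. The raw file is only read, the cleaned data go to a new file, and each step is appended to a plain-text log:

```python
import csv
import os
from datetime import date

def clean_copy(raw_path, clean_path, log_path):
    """Write a cleaned copy of raw_path to clean_path and log each step.

    The raw file is only ever opened for reading, and the function refuses
    to write over it.
    """
    if os.path.abspath(raw_path) == os.path.abspath(clean_path):
        raise ValueError("clean_path must differ from raw_path")

    with open(raw_path, newline="") as f:
        reader = csv.DictReader(f)
        fieldnames = reader.fieldnames or []
        rows = list(reader)

    steps = []

    # Example cleaning step: trim stray spaces around every value.
    for row in rows:
        for key in fieldnames:
            if isinstance(row.get(key), str):
                row[key] = row[key].strip()
    steps.append("Stripped leading/trailing whitespace from every cell")

    with open(clean_path, "w", newline="") as f:
        writer = csv.DictWriter(f, fieldnames=fieldnames)
        writer.writeheader()
        writer.writerows(rows)

    # Append the steps to a plain-text log kept next to the data files.
    with open(log_path, "a") as log:
        log.write(f"{date.today().isoformat()}: {raw_path} -> {clean_path}\n")
        for step in steps:
            log.write(f"  - {step}\n")
    return steps
```

For example, clean_copy("surveys_raw.csv", "surveys_clean.csv", "cleaning_log.txt") leaves surveys_raw.csv exactly as it was.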

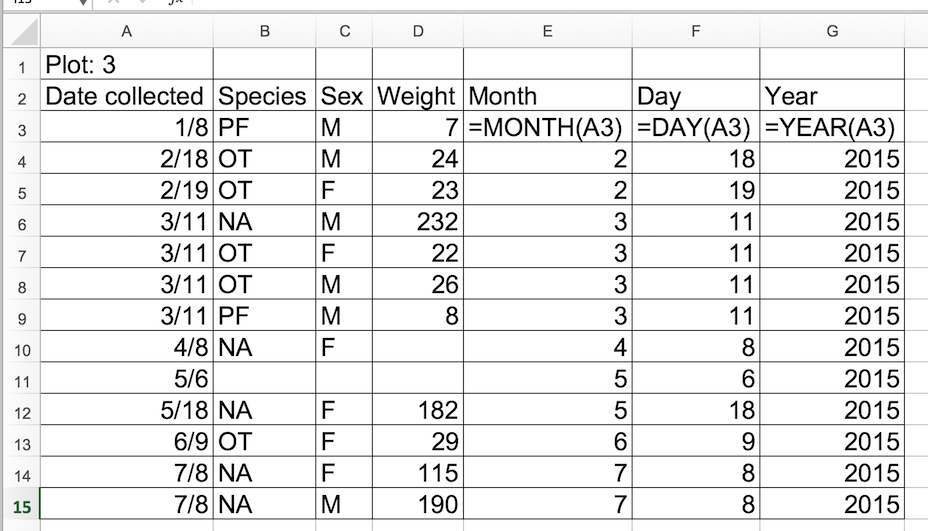

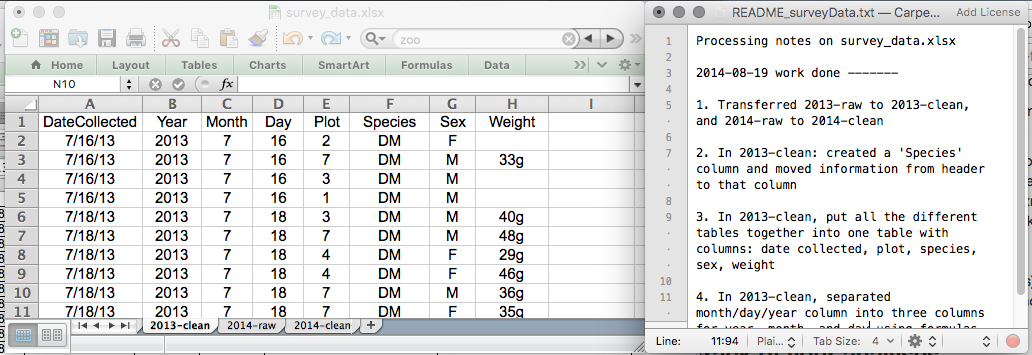

This might be an example of a spreadsheet setup:

Put these principles into practice today during your exercises.

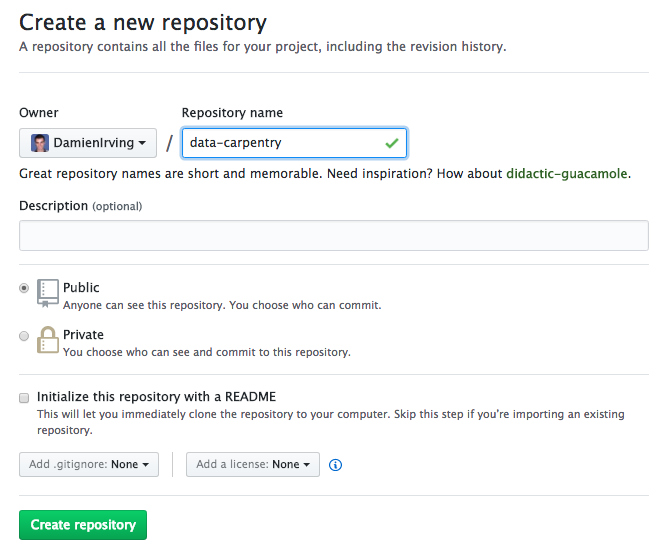

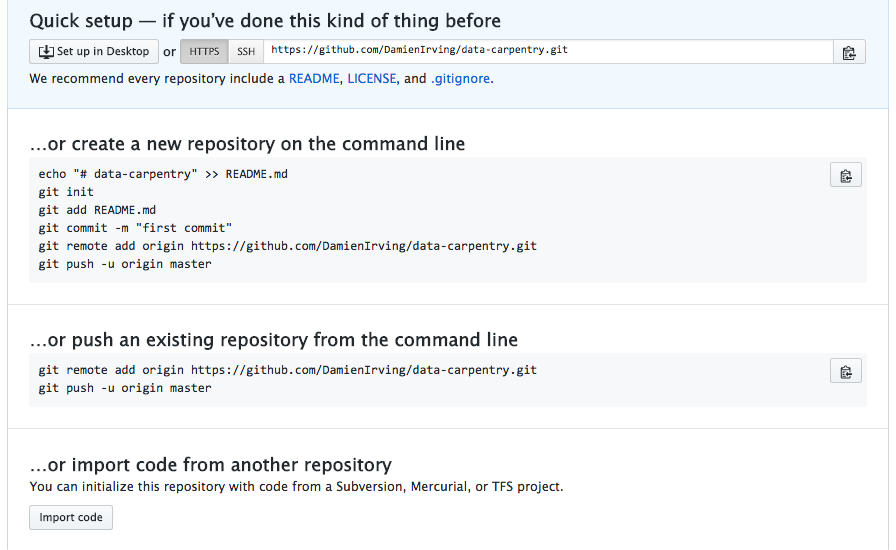

Note: This is out of scope for this lesson, but for information on how to maintain version control over your data, look at our lesson on ‘Git’.

Structuring data in spreadsheets

The cardinal rule of using spreadsheet programs for data is to keep it “tidy”:

- Put all your variables in columns - the thing you’re measuring, like ‘weight’ or ‘temperature’.

- Put each observation in its own row.

- Don’t combine multiple pieces of information in one cell. Sometimes it just seems like one thing, but think about whether that’s the only way you’ll want to be able to use or sort that data.

- Leave the raw data raw - don’t change it!

- Export the cleaned data to a text-based format like CSV (comma-separated values) format. This ensures that anyone can use the data, and is required by most data repositories.
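As a minimal sketch of the first two rules, take a hypothetical table that was entered with one column per survey date. The Python code below (standard library only) reshapes it so that each observation gets its own row, then writes it back out as CSV text:

```python
import csv
import io

# Hypothetical "wide" layout: one column per survey date.
WIDE = """plot,2014-05-29,2014-06-12
1,3,5
2,0,4
"""

def wide_to_long(text, id_col="plot", var_name="date", value_name="count"):
    """Reshape a wide CSV (one column per date) into one row per observation."""
    reader = csv.DictReader(io.StringIO(text))
    long_rows = []
    for row in reader:
        for col, value in row.items():
            if col == id_col:
                continue
            long_rows.append({id_col: row[id_col], var_name: col, value_name: value})
    return long_rows

def to_csv(rows):
    """Serialise the tidy rows back to CSV text."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=list(rows[0].keys()))
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()

print(to_csv(wide_to_long(WIDE)))
```

In the output, the date becomes a variable in its own column instead of being hidden in the header.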

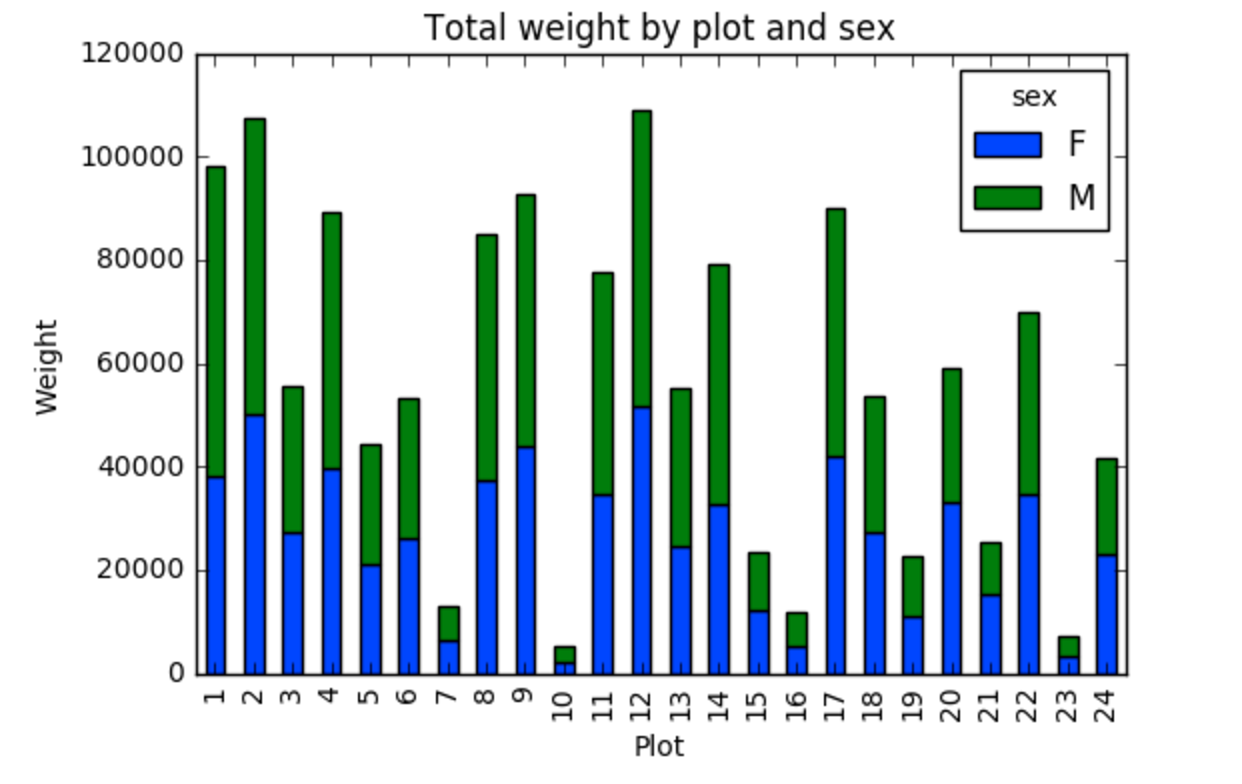

For instance, we have data from a survey of small mammals in a desert ecosystem. Different people have gone to the field and entered data into a spreadsheet. They keep track of things like species, plot, weight, sex and date collected.

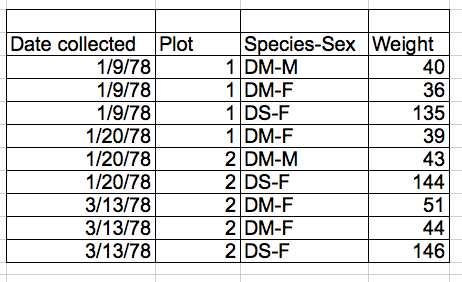

If they were to keep track of the data like this:

the problem is that species and sex are in the same field. So, if they wanted to look at all of one species or look at different weight distributions by sex, it would be hard to do this using this data setup. If instead we put sex and species in different columns, you can see that it would be much easier.
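If a dataset already mixes the two, you can split the combined field during cleaning. The Python sketch below assumes a hypothetical format in which the species code and the sex are separated by a space (for example ‘DM M’):

```python
def split_species_sex(value):
    """Split a combined field such as 'DM M' into (species, sex).

    Assumes the two parts are separated by whitespace; a value with no
    sex part returns an empty string for sex.
    """
    parts = value.split()
    species = parts[0] if parts else ""
    sex = parts[1] if len(parts) > 1 else ""
    return species, sex

combined = ["DM M", "PE F", "DM"]
rows = [dict(zip(("species", "sex"), split_species_sex(v))) for v in combined]
for row in rows:
    print(row)
```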

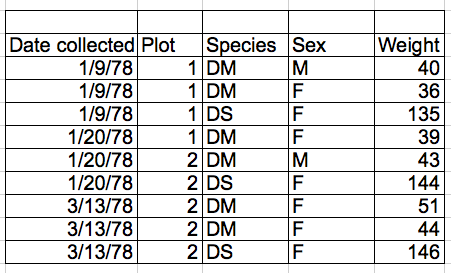

Columns for variables and rows for observations

The rule of thumb, when setting up a datasheet, is columns = variables, rows = observations, cells = data (values).

So, instead we should have:

Discussion

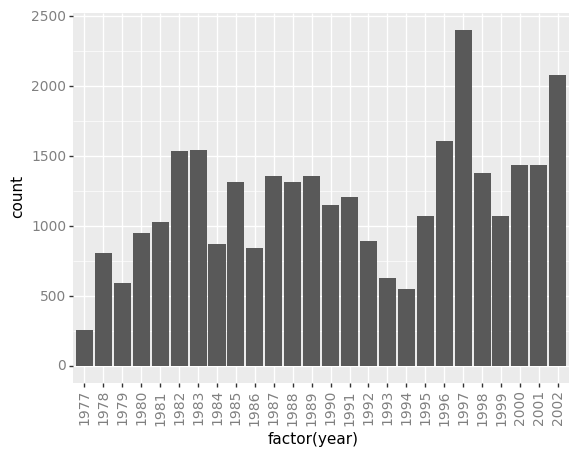

If not already discussed, introduce the dataset that will be used in this lesson, and in the other ecology lessons, the Portal Project Teaching Dataset.

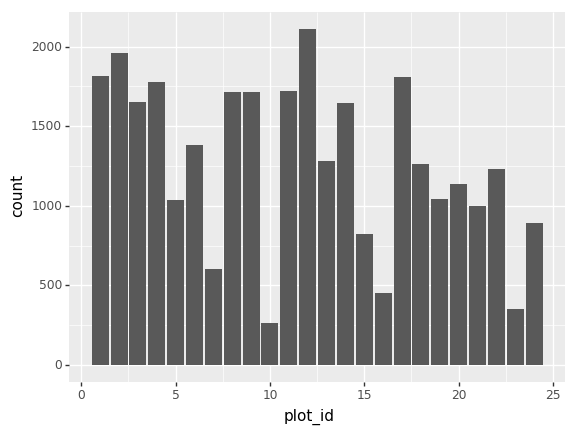

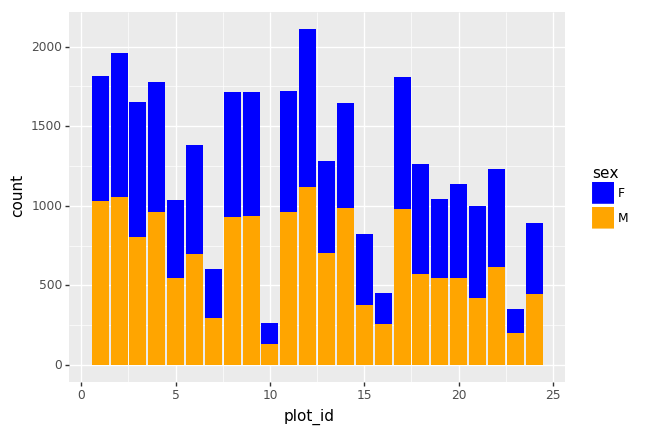

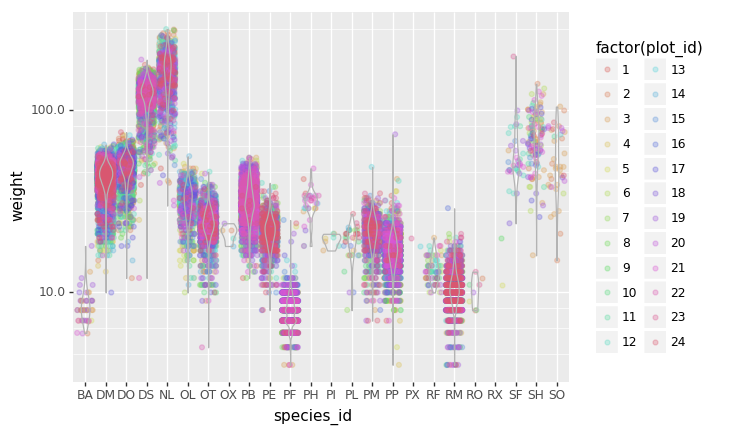

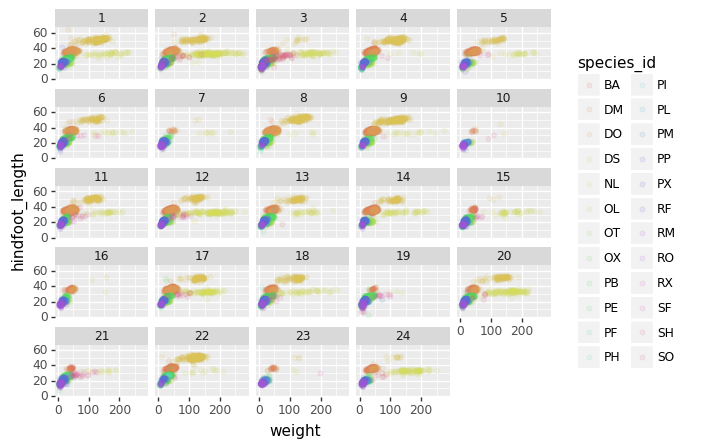

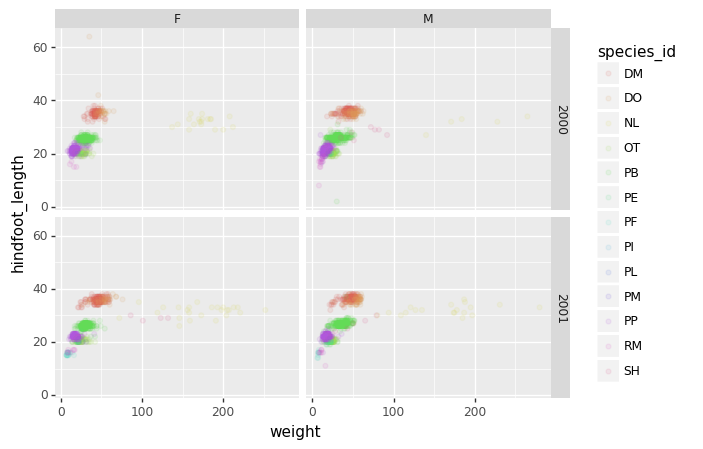

The data used in the ecology lessons are observations of a small mammal community in southern Arizona. This is part of a project studying the effects of rodents and ants on the plant community that has been running for almost 40 years. The rodents are sampled on a series of 24 plots, with different experimental manipulations controlling which rodents are allowed to access which plots.

This is a real dataset that has been used in over 100 publications. We’ve simplified it just a little bit for the workshop, but you can download the full dataset and work with it using exactly the same tools we’ll learn about today.

Exercise

We’re going to take a messy version of the survey data and describe how we would clean it up.

- Download the data from FigShare.

- Open up the data in a spreadsheet program.

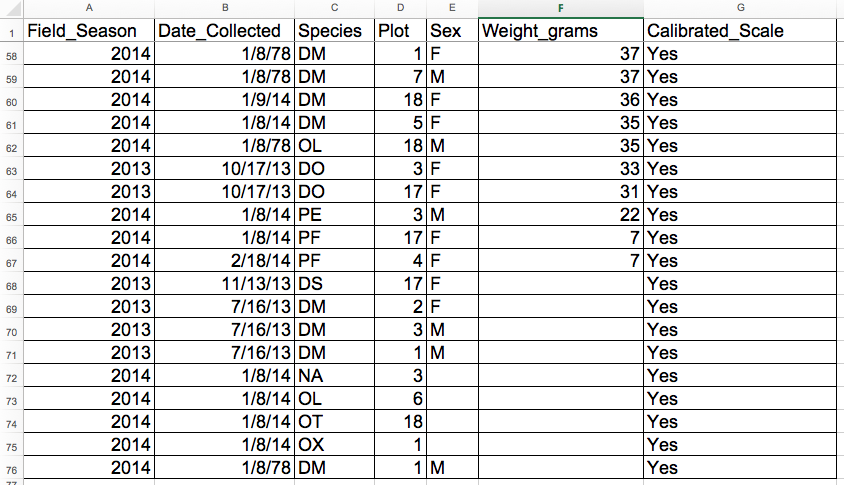

- You can see that there are two tabs. Two field assistants conducted the surveys, one in 2013 and one in 2014, and they each kept track of the data in their own way in tabs ‘2013’ and ‘2014’ of the dataset, respectively. Now you’re the person in charge of this project and you want to be able to start analyzing the data.

- With the person next to you, identify what is wrong with this spreadsheet. Also discuss the steps you would need to take to clean up the ‘2013’ and ‘2014’ tabs, and to put them all together in one spreadsheet.

Important: Do not forget our first piece of advice: create a new file (or tab) for the cleaned data, and never modify your original (raw) data.

After you go through this exercise, we’ll discuss as a group what was wrong with this data and how you would fix it.

Solution

- Take about 10 minutes to work on this exercise.

- All the mistakes in 02-common-mistakes are present in the messy dataset. If the exercise is done during a workshop, ask people what they saw as wrong with the data. As they bring up different points, you can refer to 02-common-mistakes or expand a bit on the point they brought up.

- Note that there is a problem with dates in table ‘plot 3’ in the ‘2014’ tab. The field assistant who collected the data for year 2014 initially forgot to include their data for ‘plot 3’. They came back in 2015 to include the missing data and entered the dates for ‘plot 3’ in the dataset without the year. Excel automatically filled in the missing year as the current year (i.e. 2015), introducing an error in the data without the field assistant realising. If participants respond that they’ve spotted and fixed the problem with dates, you can say you’ll come back to dates towards the end of the lesson in episode 03-dates-as-data. If participants have not spotted the problem with dates in the ‘plot 3’ table, that’s fine, as you will address peculiarities of working with dates in spreadsheets in episode 03-dates-as-data.

An excellent reference, in particular with regard to R scripting, is:

Hadley Wickham, “Tidy Data”, Journal of Statistical Software, Vol. 59, Issue 10, Sep 2014. http://www.jstatsoft.org/v59/i10.

Key Points

Never modify your raw data. Always make a copy before making any changes.

Keep track of all of the steps you take to clean your data in a plain text file.

Organize your data according to tidy data principles.

Formatting problems

Overview

Teaching: 20 min

Exercises: 0 min

Questions

What are some common challenges with formatting data in spreadsheets and how can we avoid them?

Objectives

Recognize and resolve common spreadsheet formatting problems.

Common Spreadsheet Errors

This lesson is meant to be used as a reference for discussion as learners identify issues with the messy dataset discussed in the previous lesson. Instructors: don’t go through this lesson except to refer to responses to the exercise in the previous lesson.

There are a few potential errors to be on the lookout for in your own data as well as in data from collaborators or the Internet. If you are aware of the errors and their possible negative effect on downstream data analysis and result interpretation, you and your project members may be more motivated to avoid them. Making small changes to the way you format your data in spreadsheets can have a great impact on efficiency and reliability when it comes to data cleaning and analysis.

- Using multiple tables

- Using multiple tabs

- Not filling in zeros

- Using problematic null values

- Using formatting to convey information

- Using formatting to make the data sheet look pretty

- Placing comments or units in cells

- Entering more than one piece of information in a cell

- Using problematic field names

- Using special characters in data

- Inclusion of metadata in data table

- Date formatting

Using multiple tables

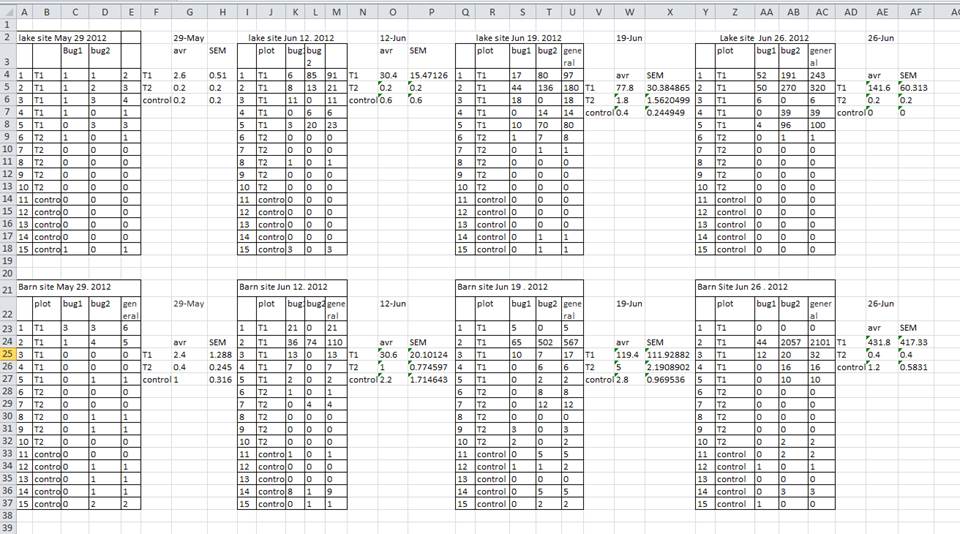

A common strategy is creating multiple data tables within one spreadsheet. This confuses the computer, so don’t do this! When you create multiple tables within one spreadsheet, you’re drawing false associations between things for the computer, which sees each row as an observation. You’re also potentially using the same field name in multiple places, which will make it harder to clean your data up into a usable form. The example below depicts the problem:

In the example above, the computer will see (for example) row 4 and assume that all columns A-AF refer to the same sample. This row actually represents four distinct samples (sample 1 for each of four different collection dates: May 29th, June 12th, June 19th, and June 26th), as well as some calculated summary statistics (an average (avr) and standard error of the mean (SEM)) for two of those samples. Other rows are similarly problematic.

Using multiple tabs

But what about workbook tabs? That seems like an easy way to organize data, right? Well, yes and no. When you create extra tabs, you prevent the computer from seeing connections in the data that are there (you have to introduce spreadsheet application-specific functions or scripting to restore this connection). Say, for instance, you make a separate tab for each day you take a measurement.

This isn’t good practice for two reasons: 1) you are more likely to accidentally add inconsistencies to your data if you start recording data in a new tab each time you take a measurement, and 2) even if you manage to prevent all inconsistencies from creeping in, you will add an extra step for yourself before you analyze the data, because you will have to combine these data into a single data table. You will have to explicitly tell the computer how to combine tabs, and if the tabs are inconsistently formatted, you might even have to do it manually.

The next time you’re entering data, and you go to create another tab or table, ask yourself if you could avoid adding this tab by adding another column to your original spreadsheet. We used multiple tabs in our example of a messy data file, but now you’ve seen how you can reorganize your data to consolidate across tabs.
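If your data already live in several tabs, one way to consolidate them is sketched below in Python. It assumes each tab has been exported to its own CSV text and that the tab name is the survey date. The tables are stacked and the tab name is recorded in a new column, which refuses to combine tabs whose headers differ:

```python
import csv
import io

# Hypothetical: each tab exported to its own CSV, keyed by survey date.
TABS = {
    "2014-05-29": "plot,count\n1,3\n2,0\n",
    "2014-06-12": "plot,count\n1,5\n2,4\n",
}

def combine_tabs(tabs, key_name="date"):
    """Stack per-tab tables into one, recording the tab name in a new column.

    Raises ValueError if the tabs do not share the same header.
    """
    combined = []
    expected = None
    for tab_name, text in tabs.items():
        reader = csv.DictReader(io.StringIO(text))
        if expected is None:
            expected = reader.fieldnames
        elif reader.fieldnames != expected:
            raise ValueError(f"tab {tab_name!r} has header {reader.fieldnames}")
        for row in reader:
            combined.append({key_name: tab_name, **row})
    return combined

for row in combine_tabs(TABS):
    print(row)
```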

Your data sheet might get very long over the course of the experiment. This makes it harder to enter data if you can’t see your headers at the top of the spreadsheet. But don’t repeat your header row: repeated headers can easily get mixed into the data, leading to problems down the road.

Instead you can freeze the column headers so that they remain visible even when you have a spreadsheet with many rows.

Documentation on how to freeze column headers in MS Excel

Not filling in zeros

It might be that when you’re measuring something, it’s usually a zero, say the number of times a rabbit is observed in the survey. Why bother writing in the number zero in that column, when it’s mostly zeros?

However, there’s a difference between a zero and a blank cell in a spreadsheet. To the computer, a zero is actually data. You measured or counted it. A blank cell means that it wasn’t measured and the computer will interpret it as an unknown value (otherwise known as a null value).

Spreadsheets and statistical programs will likely misinterpret blank cells that you intend to be zeros. By not entering the value of your observation, you are telling your computer to represent that data as unknown or missing (null). This can cause problems with subsequent calculations or analyses. For example, in R the mean of a set of numbers that includes a single NA is NA by default (because the computer can’t guess the value of the missing observation), while Excel’s AVERAGE() skips blank cells and averages only the remaining values. Either way, the result is not what you would get if the blanks had been recorded as zeros. Because of this, it’s very important to record zeros as zeros and truly missing data as nulls.
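Here is a minimal Python illustration of how the choice between blanks and zeros changes a simple average. The counts are made up for five surveys, and the blanks are skipped, as Excel’s AVERAGE() does with empty cells:

```python
from statistics import mean

# Made-up rabbit counts from five surveys; None stands for a blank cell.
with_blanks = [2, None, None, 1, None]
with_zeros = [2, 0, 0, 1, 0]

def mean_ignoring_blanks(values):
    """Average only the non-blank values, as Excel's AVERAGE() does with empty cells."""
    return mean(v for v in values if v is not None)

print(mean_ignoring_blanks(with_blanks))  # 1.5
print(mean(with_zeros))                   # 0.6
```

The same field observations give an average more than twice as high when the zeros are left blank.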

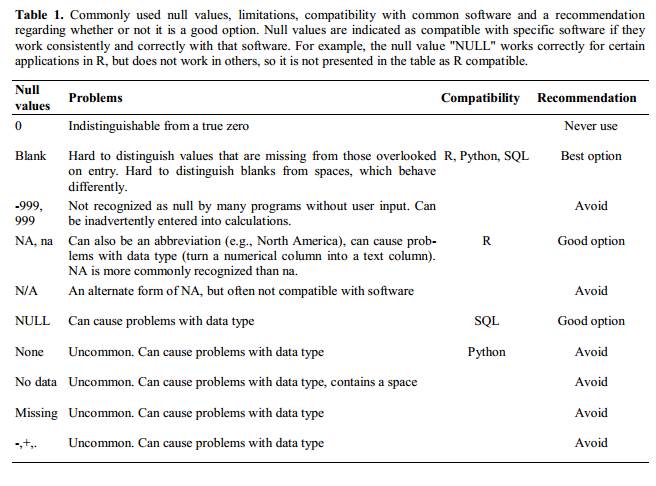

Using problematic null values

Example: using -999 or other numerical values (or zero) to represent missing data.

Solutions:

There are a few reasons why null values get represented differently within a dataset. Sometimes confusing null values are automatically recorded by the measuring device. If that’s the case, there’s not much you can do, but it can be addressed during data cleaning with a tool like OpenRefine before analysis. Other times, different null values are used to convey different reasons why the data isn’t there. This is important information to capture, but it is in effect using one column to capture two pieces of information. As with using formatting to convey information, it would be good here to create a new column such as ‘data_missing’ and use that column to capture the different reasons.

Whatever the reason, it’s a problem if unknown or missing data is recorded as -999, 999, or 0. Many statistical programs will not recognize that these are intended to represent missing (null) values. How these values are interpreted will depend on the software you use to analyze your data. It is essential to use a clearly defined and consistent null indicator. Blanks (most applications) and NA (for R) are good choices. White et al. (2013) explain good choices for indicating null values in different software applications in their article “Nine simple ways to make it easier to (re)use your data”, published in Ideas in Ecology and Evolution.
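If you receive data that already use numeric codes for missing values, you can convert them to a real null when you read the file. The Python sketch below assumes a hypothetical ‘weight’ column in which -999, 999 or an empty cell all mean “not recorded”. Adjust the set of codes to whatever your data actually use, and never include a code that could also be a genuine measurement:

```python
import csv
import io

RAW = """record_id,weight
1,40
2,-999
3,
"""

# Hypothetical codes used for "missing" in this particular file.
SENTINELS = {"-999", "999", ""}

def read_weights(text, sentinels=SENTINELS):
    """Return weights as floats, with sentinel codes mapped to None."""
    weights = []
    for row in csv.DictReader(io.StringIO(text)):
        raw = row["weight"].strip()
        weights.append(None if raw in sentinels else float(raw))
    return weights

print(read_weights(RAW))
```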

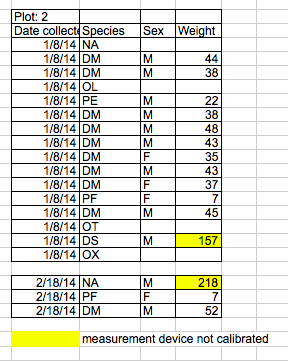

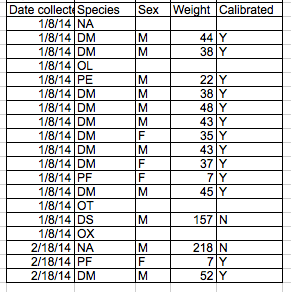

Using formatting to convey information

Example: highlighting cells, rows or columns that should be excluded from an analysis, leaving blank rows to indicate separations in data.

Solution: create a new field to encode which data should be excluded.
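With a dedicated field, a program can apply the exclusion rule, and the rule is recorded with the data. Here is a minimal Python sketch, assuming a hypothetical ‘exclude’ column holding ‘yes’ or ‘no’:

```python
# Hypothetical rows as read from a CSV file (all values are strings).
rows = [
    {"record_id": "1", "weight": "40", "exclude": "no"},
    {"record_id": "2", "weight": "410", "exclude": "yes"},
    {"record_id": "3", "weight": "48", "exclude": "no"},
]

def rows_to_keep(rows, flag="exclude"):
    """Drop rows whose exclusion flag is 'yes' (case and surrounding spaces ignored)."""
    return [row for row in rows if row[flag].strip().lower() != "yes"]

kept = rows_to_keep(rows)
print([row["record_id"] for row in kept])  # ['1', '3']
```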

Using formatting to make the data sheet look pretty

Example: merging cells.

Solution: If you’re not careful, formatting a worksheet to be more aesthetically pleasing can compromise your computer’s ability to see associations in the data. Merged cells can make your data unreadable by statistics software. Consider restructuring your data in such a way that you will not need to merge cells to organize your data.

Placing comments or units in cells

Example: Your data was collected, in part, by a summer student who you later found out was mis-identifying some of your species, some of the time. You want a way to note these data are suspect.

Solution: Most analysis software can’t see Excel or LibreOffice comments, and would be confused by comments placed within your data cells. As described above for formatting, create another field if you need to add notes to cells. Similarly, don’t include units in cells: ideally, all the measurements you place in one column should be in the same unit, but if for some reason they aren’t, create another field and specify the units the cell is in.

Entering more than one piece of information in a cell

Example: You find one male, and one female of the same species. You enter this as 1M, 1F.

Solution: Don’t include more than one piece of information in a cell. This will limit the ways in which you can analyze your data. If you need both these measurements, design your data sheet to include this information. For example, include one column for number of individuals and a separate column for sex.
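If you inherit cells like ‘1M, 1F’, they can be unpacked into that one-value-per-cell layout. The Python sketch below assumes, hypothetically, that each comma-separated item is a whole number followed by a single sex code letter, and it raises an error on anything else rather than guessing:

```python
import re

def parse_counts(cell):
    """Turn a cell like '1M, 1F' into separate count/sex records."""
    records = []
    for item in cell.split(","):
        match = re.fullmatch(r"\s*(\d+)\s*([A-Za-z])\s*", item)
        if match is None:
            raise ValueError(f"cannot parse {item!r}")
        records.append({"count": int(match.group(1)), "sex": match.group(2).upper()})
    return records

print(parse_counts("1M, 1F"))
```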

Using problematic field names

Choose descriptive field names, but be careful not to include spaces, numbers, or special characters of any kind. Spaces can be misinterpreted by parsers that use whitespace as delimiters and some programs don’t like field names that are text strings that start with numbers.

Underscores (_) are a good alternative to spaces. Consider writing names in camel case (like this: ExampleFileName) to improve readability. Remember that abbreviations that make sense at the moment may not be so obvious in 6 months, but don’t overdo it with names that are excessively long. Including the units in the field names avoids confusion and enables others to readily interpret your fields.

Examples

| Good Name | Good Alternative | Avoid |
|---|---|---|
| Max_temp_C | MaxTemp | Maximum Temp (°C) |
| Precipitation_mm | Precipitation | precmm |
| Mean_year_growth | MeanYearGrowth | Mean growth/year |
| sex | sex | M/F |
| weight | weight | w. |
| cell_type | CellType | Cell Type |
| Observation_01 | first_observation | 1st Obs |
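Some of these rules can be checked automatically. The Python sketch below is a heuristic of our own, not a standard. It flags names that contain anything other than letters, digits and underscores, or that do not start with a letter. It cannot judge whether an abbreviation such as precmm will still make sense in 6 months, so a human review is still needed:

```python
import re

# Letters first, then only letters, digits or underscores.
VALID_NAME = re.compile(r"[A-Za-z][A-Za-z0-9_]*")

def problematic_names(fieldnames):
    """Return the header names that break the rules described above."""
    return [name for name in fieldnames if not VALID_NAME.fullmatch(name)]

header = ["Max_temp_C", "Maximum Temp (°C)", "precmm", "1st Obs", "Observation_01", "w."]
print(problematic_names(header))
```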

Using special characters in data

Example: You treat your spreadsheet program as a word processor when writing notes, for example copying data directly from Word or other applications.

Solution: This is a common strategy. For example, when writing longer text in a cell, people often include line breaks, em-dashes, etc. in their spreadsheet. Also, when copying data in from applications such as Word, formatting and fancy non-standard characters (such as curly left and right quotation marks) are included. When exporting this data into a coding/statistical environment or into a relational database, dangerous things may occur, such as lines being cut in half and encoding errors being thrown.

General best practice is to avoid adding characters such as newlines, tabs, and vertical tabs. In other words, treat a text cell as if it were a simple web form that can only contain text and spaces.
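Text that has already picked up these characters can be normalised before export. The Python sketch below uses a replacement table of our own choosing. It maps curly quotes and long dashes to plain ASCII equivalents, turns newlines and tabs (including vertical tabs) into spaces, and collapses runs of spaces:

```python
# Characters that often sneak in from word processors, mapped to plain text.
REPLACEMENTS = str.maketrans({
    "\u2018": "'",  # left single quotation mark
    "\u2019": "'",  # right single quotation mark
    "\u201c": '"',  # left double quotation mark
    "\u201d": '"',  # right double quotation mark
    "\u2013": "-",  # en dash
    "\u2014": "-",  # em dash
    "\n": " ",
    "\r": " ",
    "\t": " ",
    "\v": " ",      # vertical tab
})

def plain_text_cell(value):
    """Replace fancy punctuation and line/tab characters, then collapse repeated spaces."""
    return " ".join(value.translate(REPLACEMENTS).split())

print(plain_text_cell("\u201cDM\u201d seen\nnear the fence"))
```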

Inclusion of metadata in data table

Example: You add a legend at the top or bottom of your data table explaining column meaning, units, exceptions, etc.

Solution: Recording data about your data (“metadata”) is essential. You may be on intimate terms with your dataset while you are collecting and analysing it, but the chances that you will still remember that the variable “sglmemgp” means single member of group, for example, or the exact algorithm you used to transform a variable or create a derived one, after a few months, a year, or more are slim.

As well, there are many reasons other people may want to examine or use your data - to understand your findings, to verify your findings, to review your submitted publication, to replicate your results, to design a similar study, or even to archive your data for access and re-use by others. While digital data by definition are machine-readable, understanding their meaning is a job for human beings. The importance of documenting your data during the collection and analysis phase of your research cannot be overestimated, especially if your research is going to be part of the scholarly record.

However, metadata should not be contained in the data file itself. Unlike a table in a paper or a supplemental file, metadata (in the form of legends) should not be included in a data file since this information is not data, and including it can disrupt how computer programs interpret your data file. Rather, metadata should be stored as a separate file in the same directory as your data file, preferably in plain text format with a name that clearly associates it with your data file. Because metadata files are free text format, they also allow you to encode comments, units, information about how null values are encoded, etc. that are important to document but can disrupt the formatting of your data file.

Additionally, file- or database-level metadata describes how the files that make up the dataset relate to each other, what format they are in, and whether they supersede or are superseded by previous files. A folder-level readme.txt file is the classic way of accounting for all the files and folders in a project.

(Text on metadata adapted from the online course Research Data MANTRA by EDINA and Data Library, University of Edinburgh. MANTRA is licensed under a Creative Commons Attribution 4.0 International License.)

Key Points

Avoid using multiple tables within one spreadsheet.

Avoid spreading data across multiple tabs.

Record zeros as zeros.

Use an appropriate null value to record missing data.

Don’t use formatting to convey information or to make your spreadsheet look pretty.

Place comments in a separate column.

Record units in column headers.

Include only one piece of information in a cell.

Avoid spaces, numbers and special characters in column headers.

Avoid special characters in your data.

Record metadata in a separate plain text file.

Dates as data

Overview

Teaching: 10 min

Exercises: 3 min

Questions

What are good approaches for handling dates in spreadsheets?

Objectives

Describe how dates are stored and formatted in spreadsheets.

Describe the advantages of alternative date formatting in spreadsheets.

Demonstrate best practices for entering dates in spreadsheets.

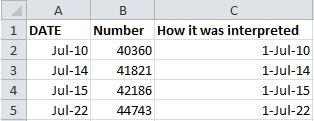

Dates in spreadsheets are generally stored in a single column. While this seems the most natural way to record dates, it actually is not best practice. A spreadsheet application will display the dates in a seemingly correct way (to a human observer), but how it actually handles and stores the dates may be problematic.

In particular, please remember that functions that are valid for a given spreadsheet program (be it LibreOffice, Microsoft Excel, OpenOffice, Gnumeric, etc.) are usually guaranteed to be compatible only within the same family of products. If you will later need to export the data and need to preserve the timestamps, you are better off handling them using one of the solutions discussed below.

Additionally, Excel can turn things that aren’t dates into dates, for example names or identifiers like MAR1, DEC1, OCT4. So if you’re avoiding the date format overall, it’s easier to identify these issues.

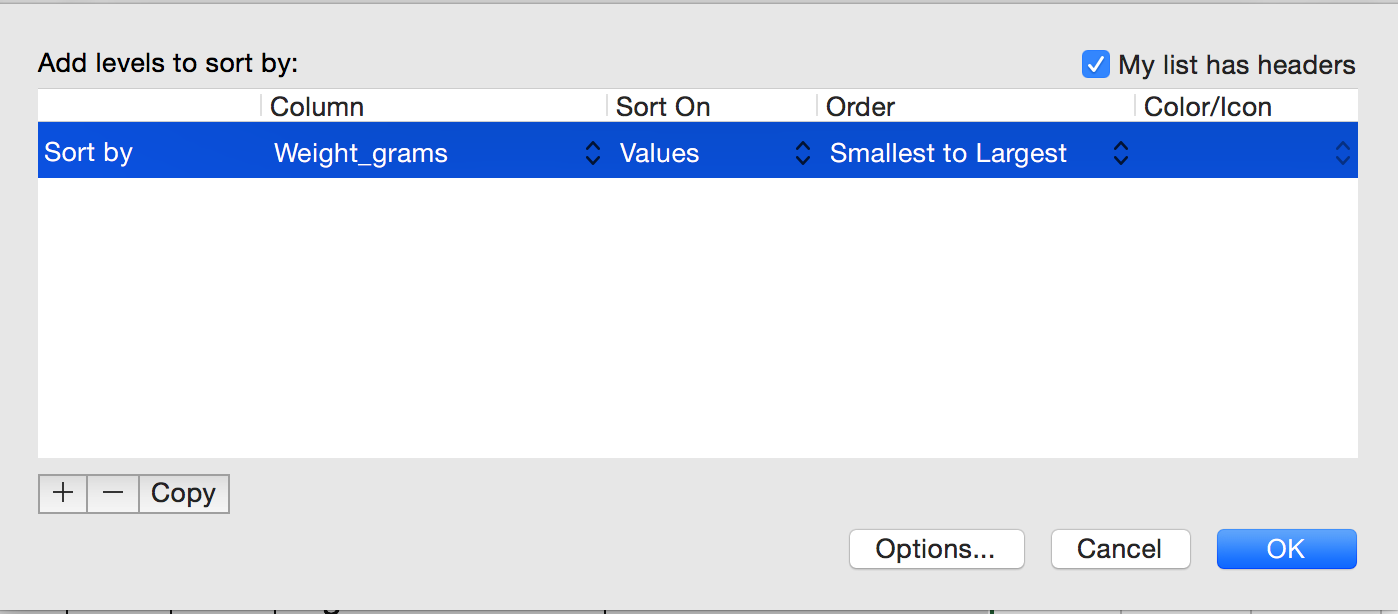

Exercise

Challenge: pulling month, day and year out of dates

- Let’s create a tab called ‘dates’ in our data spreadsheet and copy the ‘plot 3’ table from the ‘2014’ tab (that contains the problematic dates).

- Let’s extract month, day and year from the dates in the ‘Date collected’ column into new columns. For this we can use the following built-in Excel functions:

YEAR()

MONTH()

DAY()

(Make sure the new columns are formatted as a number and not as a date.)

You can see that even though we expected the year to be 2014, the year is actually 2015. What happened here is that the field assistant who collected the data for year 2014 initially forgot to include their data for ‘plot 3’ in this dataset. They came back in 2015 to add the missing data into the dataset and entered the dates for ‘plot 3’ without the year. Excel automatically interpreted the year as 2015 - the year the data was entered into the spreadsheet and not the year the data was collected. Thereby, the spreadsheet program introduced an error in the dataset without the field assistant realising.
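Outside a spreadsheet, the same parts are available on a date object. In the Python sketch below, hypothetical dates stand in for the ‘plot 3’ rows, and any date whose year is not the survey year is flagged. This is one way this kind of silent error could be caught:

```python
from datetime import date

# Hypothetical dates standing in for rows of the 2014 tab.
collected = [date(2014, 6, 5), date(2015, 7, 2), date(2015, 7, 3)]

def unexpected_years(dates, expected_year):
    """Return (year, month, day) for every date outside the expected survey year."""
    return [(d.year, d.month, d.day) for d in dates if d.year != expected_year]

print(unexpected_years(collected, 2014))
```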

Solution

Exercise

Challenge: pulling hour, minute and second out of the current time

Current time and date are best retrieved using the functions NOW(), which returns the current date and time, and TODAY(), which returns the current date. The results will be formatted according to your computer’s settings.

1) Extract the year, month and day from the current date and time string returned by the NOW() function.

2) Calculate the current time using NOW()-TODAY().

3) Extract the hour, minute and second from the current time using functions HOUR(), MINUTE() and SECOND().

4) Press F9 to force the spreadsheet to recalculate the NOW() function, and check that it has been updated.

Solution

1) To get the year, type =YEAR(NOW()) into any cell in your spreadsheet. To get the month, type =MONTH(NOW()). To get the day, type =DAY(NOW()).

2) Typing =NOW()-TODAY() will result in a decimal value that is not easy to read as a clock time. You will need to use the strategies in the third part of this challenge to convert this decimal value to readable time.

3) To extract the hour, type =HOUR(NOW()-TODAY()) and similarly for minute and second.
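The decimal from NOW()-TODAY() is the fraction of a day that has passed since midnight. The Python sketch below shows the arithmetic behind HOUR(), MINUTE() and SECOND() on such a fraction. Rounding to the nearest whole second is an assumption of this sketch, not necessarily what Excel does:

```python
from datetime import datetime, time, timedelta

def fraction_to_hms(fraction):
    """Split a fraction of a day into (hours, minutes, seconds).

    Rounds to the nearest whole second; a fraction within half a second
    of 1 therefore gives (24, 0, 0).
    """
    total_seconds = round(fraction * 24 * 60 * 60)
    hours, remainder = divmod(total_seconds, 3600)
    minutes, seconds = divmod(remainder, 60)
    return hours, minutes, seconds

# A Python counterpart of NOW()-TODAY(): time since midnight as a fraction of a day.
now = datetime.now()
midnight = datetime.combine(now.date(), time.min)
fraction_of_day = (now - midnight) / timedelta(days=1)
print(fraction_of_day, fraction_to_hms(fraction_of_day))
```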

Preferred date format

It is much safer to store dates with YEAR, MONTH, DAY in separate columns or as YEAR and DAY-OF-YEAR in separate columns.
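In a script, it is straightforward to split dates into these columns and to rebuild a date from them when needed. Here is a minimal Python sketch (the column names are illustrative):

```python
from datetime import date, timedelta

def split_date(d):
    """Return separate year, month, day and day-of-year values for one date."""
    return {
        "year": d.year,
        "month": d.month,
        "day": d.day,
        "day_of_year": d.timetuple().tm_yday,
    }

def from_year_and_day_of_year(year, day_of_year):
    """Rebuild a date from YEAR and DAY-OF-YEAR columns (1 = 1 January)."""
    return date(year, 1, 1) + timedelta(days=day_of_year - 1)

print(split_date(date(2014, 7, 2)))
```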

Note: Excel is unable to parse dates from before 1899-12-31, and will thus leave these untouched. If you’re mixing historic data from before and after this date, Excel will translate only the post-1900 dates into its internal format, thus resulting in mixed data. If you’re working with historic data, be extremely careful with your dates!

Excel also supports a second date system, the 1904 date system, which was the default in older versions of Excel for Macintosh. This system assigns each date a different serial number than the 1900 date system does. Because of this, dates must be checked for accuracy when exporting data from Excel (look for dates that are ~4 years off).

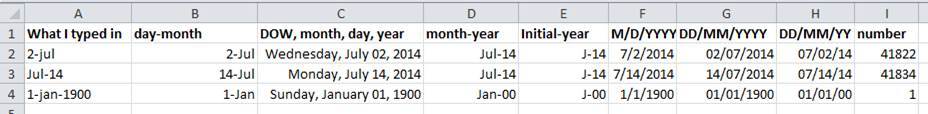

Date formats in spreadsheets

Spreadsheet programs have numerous “useful features” which allow them to handle dates in a variety of ways.

But these “features” often allow ambiguity to creep into your data. Ideally, data should be as unambiguous as possible.

Dates stored as integers

The first thing you need to know is that Excel stores dates as numbers - see the last column in the above figure. Essentially, it counts the days from a default of December 31, 1899, and thus stores July 2, 2014 as the serial number 41822.

(But wait. That’s the default on my version of Excel. We’ll get into how this can introduce problems later in this lesson.)
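Here is a minimal Python sketch of the conversion, for whole-day serial numbers only. It counts from 30 December 1899 rather than 31 December, because Excel treats 1900 as a leap year. For dates from March 1900 onwards, that extra day shifts the effective starting point back by one. The sketch also covers the 1904 date system, whose serial for the same date is 1462 smaller. That is why a number read in the wrong system lands about four years off:

```python
from datetime import date, timedelta

# Excel's 1900 system numbers 1 January 1900 as day 1 but also counts a
# 29 February 1900 that never existed, so for dates from March 1900 onwards
# the net effect is counting days from 30 December 1899.
EPOCH_1900 = date(1899, 12, 30)
# In the 1904 date system, serial number 0 is 1 January 1904.
EPOCH_1904 = date(1904, 1, 1)

def serial_to_date(serial, system=1900):
    """Convert a whole-number Excel serial to a date (1900 or 1904 system)."""
    if system not in (1900, 1904):
        raise ValueError("system must be 1900 or 1904")
    epoch = EPOCH_1900 if system == 1900 else EPOCH_1904
    return epoch + timedelta(days=serial)

def date_to_serial(d, system=1900):
    """Inverse of serial_to_date, valid for dates from March 1900 onwards."""
    epoch = EPOCH_1900 if system == 1900 else EPOCH_1904
    return (d - epoch).days

print(serial_to_date(41822))        # 2014-07-02
print(serial_to_date(41822, 1904))  # 2018-07-03, the same number read in the other system
```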

This serial number thing can actually be useful in some circumstances. By using the above functions we can easily add days, months or years to a given date. Say you had a sampling plan where you needed to sample every thirty-seven days. In another cell, you could type:

=B2+37

And it would return

8-Aug

because it understands the date as the number 41822, and 41822 + 37 = 41859, which Excel interprets as August 8, 2014. It retains the format (for the most part) of the cell that is being operated upon (unless you did some sort of formatting to the cell before, and then all bets are off). Month and year rollovers are internally tracked and applied.
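The same day arithmetic, including month and year rollovers, can be sketched with Python's standard datetime module. The helper sampling_dates is hypothetical and simply lists the dates of the thirty-seven-day sampling plan described above:

```python
from datetime import date, timedelta


def sampling_dates(start, interval_days, n):
    """Return n sampling dates spaced interval_days apart, beginning at start."""
    return [start + timedelta(days=interval_days * i) for i in range(n)]


first = date(2014, 7, 2)
print(first + timedelta(days=37))                  # 2014-08-08, like =B2+37
print(date(2014, 12, 20) + timedelta(days=37))     # 2015-01-26, year rollover handled
print(sampling_dates(first, 37, 3))
```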

Note: Adding years, months, and days is slightly trickier because we need to make sure that we are adding the amount to the correct entity.

- First we extract the single entities (day, month, or year)

- We can then add values to the appropriate entity

- Finally, the complete date is reconstructed using the DATE() function.
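In Excel this reconstruction looks like =DATE(YEAR(B2), MONTH(B2)+6, DAY(B2)). The Python sketch below follows the same three steps. The helper names are hypothetical, and it assumes that, like DATE(), a month above 12 moves into the following year and a day past the end of the month spills over into the next month:

```python
from datetime import date, timedelta


def excel_style_date(year, month, day):
    """Build a date, letting out-of-range months and days roll over."""
    # Normalise the month first (month 13 -> January of the next year, month 0 -> December)
    extra_years, month_index = divmod(month - 1, 12)
    first_of_month = date(year + extra_years, month_index + 1, 1)
    # Then let surplus days spill into the following month(s)
    return first_of_month + timedelta(days=day - 1)


def add_months(d, months):
    # Extract the single entities, add to the month, then reconstruct the date
    return excel_style_date(d.year, d.month + months, d.day)


print(add_months(date(2014, 7, 2), 6))   # 2015-01-02
print(add_months(date(2014, 1, 31), 1))  # 2014-03-03 (31 February rolls over)
```

Letting the day roll over, instead of clamping it to the last day of the month, is a design choice made here to mirror the assumed DATE() behaviour; other tools may clamp instead.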

Times are handled in a similar way: seconds can be added directly, but to add hours and minutes we need to make sure that we are adding the quantities to the correct entities.

Which brings us to the many different ways Excel can display dates. If you refer to the figure above, you’ll see that ambiguity can creep into your data depending on the format you choose when you enter it. If you’re not fully aware of which format you’re using, you can end up entering your data in a way that Excel will badly misinterpret, producing errors that are extremely difficult to track down and troubleshoot.

Exercise

What happens to the dates in the dates tab of our workbook if we save this sheet in Excel (in csv format) and then open the file in a plain text editor (like TextEdit or Notepad)? What happens to the dates if we then open the csv file in Excel?

Solution

- Click the dates tab of the workbook and double-click on any of the values in the Date collected column. Notice that the dates display with the year 2015.

- Select File -> Save As in Excel and in the drop-down menu for file format select CSV UTF-8 (Comma delimited) (.csv). Click Save.

- You will see a pop-up that says “This workbook cannot be saved in the selected file format because it contains multiple sheets.” Choose Save Active Sheet.

- Navigate to the file in your finder application. Right-click and select Open With. Choose a plain text editor application and view the file. Notice that the dates display as month/day without any year information.

- Now right-click on the file again and open it with Excel. Notice that the dates display with the current year, not 2015.

As you can see, exporting data from Excel and then importing it back into Excel fundamentally changed the data once again!

Note

You may notice that when exporting into a text-based format (such as CSV), Excel can export its internal date integer instead of a useful value (that is, the dates will be represented as integer numbers), depending on how the cells are formatted. This can potentially lead to problems if you use other software to manipulate the file.

Advantages of Alternative Date Formatting

Storing dates as YEAR, MONTH, DAY

Storing dates in YEAR, MONTH, DAY format helps remove this ambiguity. Let’s look at this issue a bit closer.

For instance, here is a spreadsheet representing insect counts that were taken every few days over the summer, and things went something like this:

If Excel was to be believed, this person had been collecting bugs in the future. Now, we have no doubt this person is highly capable, but I believe time travel was beyond even their grasp.

Entering a date in a single cell is convenient, but because spreadsheet programs may interpret and save the data in different ways (often behind the scenes), there is a better practice.

In dealing with dates in spreadsheets, separate date data into separate fields (day, month, year), which will eliminate any chance of ambiguity.

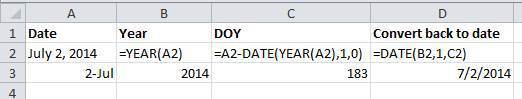
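If your dates already exist as ISO 8601 strings (YYYY-MM-DD, an assumption here), splitting them into separate fields is a short step in Python. The helper split_date is hypothetical:

```python
from datetime import date


def split_date(iso_string):
    """Split an ISO 8601 date string (YYYY-MM-DD) into separate year, month and day fields."""
    # fromisoformat also rejects impossible dates such as 2014-02-30
    parsed = date.fromisoformat(iso_string)
    return {"year": parsed.year, "month": parsed.month, "day": parsed.day}


for collected in ["2014-07-02", "2014-08-08"]:
    print(split_date(collected))
```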

Storing dates as YEAR, DAY-OF-YEAR

There is also another option. You can also store dates as year and day of year (DOY). Why? Because depending on your question, this might be what’s useful to you, and there is practically no possibility for ambiguity creeping in.

Statistical models often incorporate year as a factor, or a categorical variable, rather than a numeric variable, to account for year-to-year variation, and DOY can be used to measure the passage of time within a year.
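The year and DOY representation can be computed, and reversed, with Python's standard library. This is a sketch; the function names are hypothetical:

```python
from datetime import date, timedelta


def to_year_doy(d):
    """Return (year, day_of_year) for a date; 1 January is day 1."""
    return d.year, d.timetuple().tm_yday


def from_year_doy(year, doy):
    """Rebuild a date from a year and a day of year."""
    return date(year, 1, 1) + timedelta(days=doy - 1)


print(to_year_doy(date(2014, 7, 2)))  # (2014, 183)
print(from_year_doy(2014, 183))      # 2014-07-02
```

In a leap year every date after 28 February has a DOY one higher than in other years (2 July 2016 is day 184), which is one reason to keep the year alongside the DOY.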

So, can you convert all your dates into DOY format? Well, in Excel, here’s a useful guide:

Storing dates as a single string

Another alternative could be to convert the date string into a single string using the YYYYMMDDhhmmss format. For example, the date March 24, 2015 17:25:35 would become 20150324172535, where:

- YYYY: the full year, e.g. 2015

- MM: the month, e.g. 03

- DD: the day of month, e.g. 24

- hh: hour of day, e.g. 17

- mm: minutes, e.g. 25

- ss: seconds, e.g. 35

Such strings will be correctly sorted in ascending or descending order, and by knowing the format they can then be correctly processed by the receiving software.
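As a sketch of how receiving software might produce and process such strings, Python's datetime formatting codes map directly onto the YYYYMMDDhhmmss layout. Because every field is zero-padded to a fixed width, plain string sorting is also chronological:

```python
from datetime import datetime

FORMAT = "%Y%m%d%H%M%S"  # YYYYMMDDhhmmss

stamp = datetime(2015, 3, 24, 17, 25, 35).strftime(FORMAT)
print(stamp)  # 20150324172535

# Parsing it back requires knowing the format
parsed = datetime.strptime(stamp, FORMAT)

# Fixed-width, zero-padded fields make string order match time order
stamps = ["20150324172535", "20141231235959", "20150101000000"]
print(sorted(stamps))  # ['20141231235959', '20150101000000', '20150324172535']
```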

Key Points

Treating dates as multiple pieces of data rather than one makes them easier to handle.

Quality control

Overview

Teaching: 20 min

Exercises: 0 min

Questions

How can we carry out basic quality control and quality assurance in spreadsheets?

Objectives

Apply quality control techniques to identify errors in spreadsheets and limit incorrect data entry.

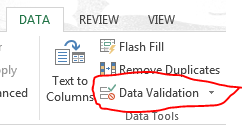

When you have a well-structured data table, you can use several simple techniques within your spreadsheet to ensure the data you enter is free of errors. These approaches include techniques that are implemented prior to entering data (quality assurance) and techniques that are used after entering data to check for errors (quality control).

Quality Assurance

Quality assurance stops bad data from ever being entered by checking to see if values are valid during data entry. For example, if research is being conducted at sites A, B, and C, then the value V (which is right next to B on the keyboard) should never be entered. Likewise, if one of the kinds of data being collected is a count, only integers greater than or equal to zero should be allowed.
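The two rules in this example can also be written as a small check. This is a minimal sketch, not how a spreadsheet implements validation; the helper check_entry and the set of valid sites are hypothetical, taken from the example above:

```python
VALID_SITES = {"A", "B", "C"}  # sites from the example above


def check_entry(site, count):
    """Return a list of problems with one (site, count) entry; empty if it is valid."""
    problems = []
    if site not in VALID_SITES:
        problems.append(f"unknown site {site!r}")
    # bool is a subclass of int in Python, so exclude it explicitly
    if not isinstance(count, int) or isinstance(count, bool) or count < 0:
        problems.append(f"count must be a non-negative integer, got {count!r}")
    return problems


print(check_entry("B", 12))  # []
print(check_entry("V", -1))
```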

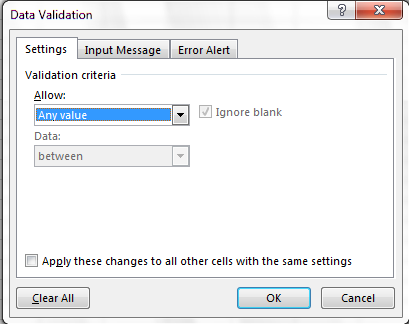

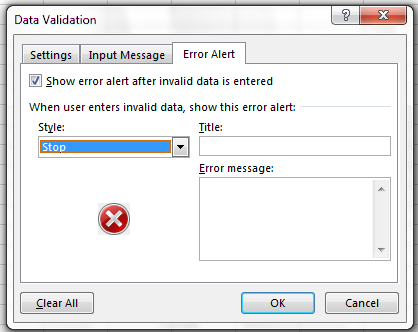

To control the kind of data entered into a spreadsheet we use Data Validation (Excel) or Validity (LibreOffice Calc), to set the values that can be entered in each data column.

1. Select the cells or column you want to validate

2. On the Data tab select Data Validation

3. In the Allow box select the kind of data that should be in the column. Options include whole numbers, decimals, lists of items, dates, and other values.

4. After selecting an item, enter any additional details. For example, if you’ve chosen a list of values, enter a comma-delimited list of allowable values in the Source box.

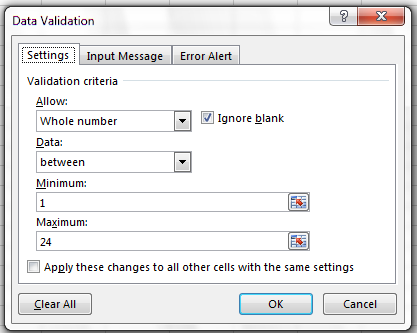

Let’s try this out by setting the plot column in our spreadsheet to only allow plot values that are integers between 1 and 24.

- Select the plot_id column

- On the Data tab select Data Validation

- In the Allow box select Whole number

- Set the minimum and maximum values to 1 and 24.

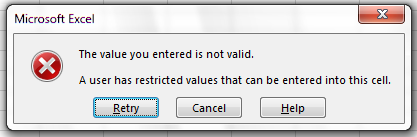

Now let’s try entering a new value in the plot column that isn’t a valid plot. The spreadsheet stops us from entering the wrong value and asks us if we would like to try again.

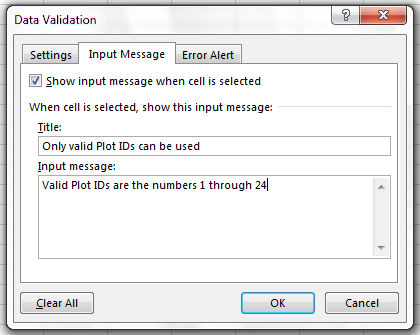
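For comparison, the whole-number rule set up above can be sketched in Python for values typed as text. The function name is hypothetical and only approximates the spreadsheet rule:

```python
def is_valid_plot_id(raw):
    """Hypothetical check mirroring a 'Whole number between 1 and 24' validation rule."""
    try:
        # int() rejects text such as "12.5", "12g" or an empty cell
        value = int(raw)
    except ValueError:
        return False
    return 1 <= value <= 24


for entry in ["1", "24", "25", "0", "12.5", "12g", ""]:
    print(repr(entry), is_valid_plot_id(entry))
```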

You can also customize the resulting message to be more informative by entering your own message in the Input Message tab, or allow invalid data to result in a warning rather than an error by modifying the Style option on the Error Alert tab.

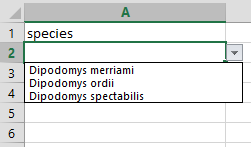

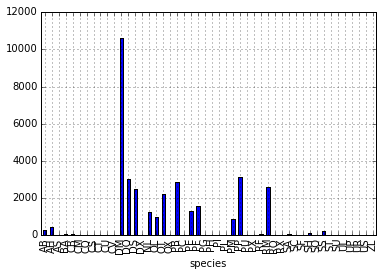

Quality assurance can make data entry easier as well as more robust. For example, if you use a list of options to restrict data entry, the spreadsheet will provide you with a drop-down list of the available items. So, instead of trying to remember how to spell Dipodomys spectabilis, you can select the right option from the list.

Quality Control

Tip: Before doing any quality control operations, save your original file with the formulas and a name indicating it is the original data. Create a separate file with appropriate naming and versioning, and ensure your data is stored as values and not as formulas. Because formulas refer to other cells, and you may be moving cells around, you may compromise the integrity of your data if you do not take this step!

readMe (README) files: As you start manipulating your data files, create a readMe document / text file to keep track of your files and document your manipulations so that they may be easily understood and replicated, either by your future self or by an independent researcher. Your readMe file should document all of the files in your data set (including documentation), describe their content and format, and lay out the organizing principles of folders and subfolders. For each of the separate files listed, it is a good idea to document the manipulations or analyses that were carried out on those data. Cornell University’s Research Data Management Service Group provides detailed guidelines for how to write a good readMe file, along with an adaptable template.

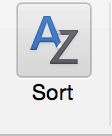

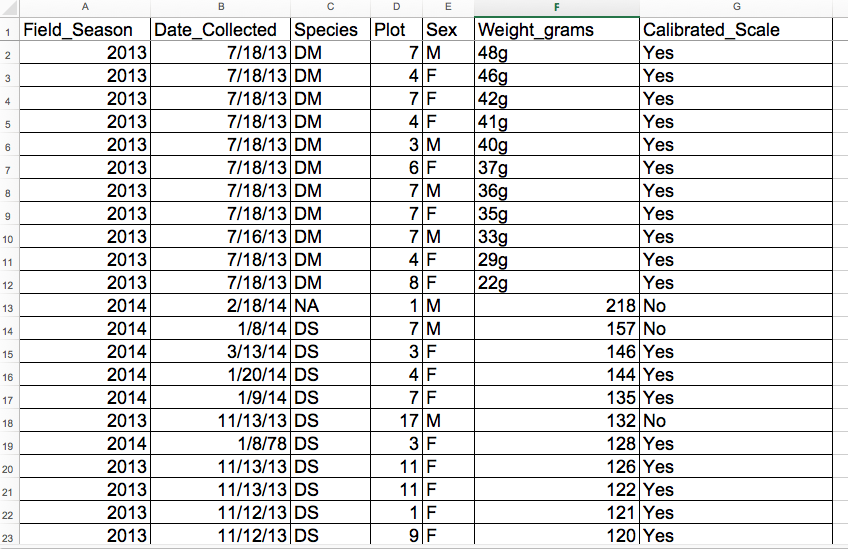

Sorting

Bad values often sort to the bottom or top of the column. For example, if your data should be numeric, then alphabetical and null data will group at the ends of the sorted data. Sort your data by each field, one at a time. Scan through each column, but pay the most attention to the top and the bottom of a column. If your dataset is well-structured and does not contain formulas, sorting should never affect the integrity of your dataset.

Remember to expand your sort in order to prevent data corruption. Expanding your sort ensures that all the data in one row move together instead of only sorting a single column in isolation. Sorting by only a single column will scramble your data: a single row will no longer represent an individual observation.
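The difference between expanding the sort and sorting a single column can be sketched in Python by treating each row as one record. The rows and their values are hypothetical:

```python
# Each row is one observation: (record_id, plot_id, weight_grams)
rows = [
    (1, 2, 40),
    (2, 3, 12),
    (3, 2, 25),
]

# Sorting whole rows keeps each observation intact ("expand the selection")
by_weight = sorted(rows, key=lambda row: row[2], reverse=True)
print(by_weight)  # [(1, 2, 40), (3, 2, 25), (2, 3, 12)]

# Sorting one column on its own breaks the link between a weight and its record
weights_only = sorted((row[2] for row in rows), reverse=True)
print(weights_only)  # [40, 25, 12]: which record does 25 belong to now?
```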

Exercise

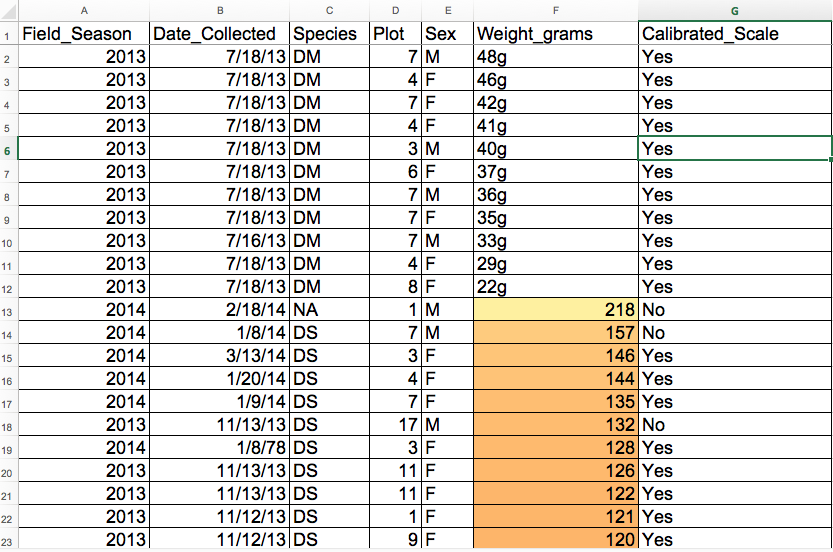

We’ve combined all of the tables from the messy data into a single table in a single tab. Download this semi-cleaned data file to your computer: survey_sorting_exercise

Once downloaded, sort the Weight_grams column in your spreadsheet program from Largest to Smallest. What do you notice?

Solution

Click the Sort button on the Data tab in Excel. A pop-up will appear. Make sure you select Expand the selection.

In the window that appears, choose the column you want to sort by as well as the sort order.

Note how the odd values sort to the top and bottom of the tabular data. The cells containing no data values sort to the bottom of the tabular data, while the cells where the letter “g” was included can be found towards the top. This is a powerful way to check your data for outliers and odd values.
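The same check can be sketched outside a spreadsheet: try to read each value as a number and set aside anything that fails, such as blanks or entries with a “g” attached. The helper name and the example values are hypothetical:

```python
def split_numeric(values):
    """Separate values that parse as numbers from those that do not (blanks, '17g', ...)."""
    numeric, odd = [], []
    for index, raw in enumerate(values):
        try:
            numeric.append((index, float(raw)))
        except ValueError:
            odd.append((index, raw))
    return numeric, odd


weights = ["42", "", "17g", "33"]  # hypothetical Weight_grams entries typed as text
numeric, odd = split_numeric(weights)
print(sorted(numeric, key=lambda pair: pair[1], reverse=True))  # [(0, 42.0), (3, 33.0)]
print(odd)  # [(1, ''), (2, '17g')]
```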

Conditional formatting

Conditional formatting can, for example, color-code your values by some criterion or from lowest to highest. This makes it easy to scan your data for outliers.

Conditional formatting should be used with caution, but it can be a great way to flag inconsistent values when entering data.

Exercise

- Make sure the Weight_grams column is highlighted.

- In the main Excel menu bar, click Home > Conditional Formatting... and choose a formatting rule.

- Apply any 2-Color Scale formatting rule.

- Now we can scan through and different colors will stand out. Do you notice any strange values?

Solution

Cells that contain non-numerical values are not colored. This includes both the cells where the letter “g” was included and the empty cells.

It is nice to be able to do these scans in spreadsheets, but we also can do these checks in a programming language like R, or in OpenRefine or SQL.

Key Points

Always copy your original spreadsheet file and work with a copy so you don’t affect the raw data.

Use data validation to prevent accidentally entering invalid data.

Use sorting to check for invalid data.

Use conditional formatting (cautiously) to check for invalid data.

Exporting data

Overview

Teaching: 10 min

Exercises: 0 min

Questions

How can we export data from spreadsheets in a way that is useful for downstream applications?

Objectives

Store spreadsheet data in universal file formats.

Export data from a spreadsheet to a CSV file.

Storing the data you’re going to work with for your analyses in Excel’s default file format (*.xls or *.xlsx, depending on the Excel version) isn’t a good idea. Why?

- Because it is a proprietary format, and it is possible that in the future the technology needed to open the file won’t exist (or will become rare enough) to make opening it inconvenient, if not impossible.

- Other spreadsheet software may not be able to open files saved in a proprietary Excel format.

- Different versions of Excel may handle data differently, leading to inconsistencies.

- Finally, more journals and grant agencies are requiring you to deposit your data in a data repository, and most of them don’t accept Excel format. It needs to be in one of the formats discussed below.

- The above points also apply to other formats such as the open data formats used by LibreOffice / OpenOffice. These formats are not static and do not get parsed the same way by different software packages.

As an example of inconsistencies in data storage, do you remember how we talked about how Excel stores dates earlier? It turns out that there are multiple defaults for different versions of the software, and you can switch between them all. So, say you’re compiling Excel-stored data from multiple sources. There are dates in each file - Excel interprets them as their own internally consistent serial numbers. When you combine the data, Excel will take the serial number from the place you’re importing it from, and interpret it using the rule set for the version of Excel you’re using. Essentially, you could be adding errors to your data, and it wouldn’t necessarily be flagged by any data cleaning methods if your ranges overlap.
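When combining files saved under different date systems, one hedged way to make the serial numbers comparable is to shift everything into a single system before merging. The sketch below assumes you already know which system each file used (Excel stores this as a workbook setting; the serials themselves do not say). The offset of 1462 days is the gap between the effective epochs of the two systems, and the function name is hypothetical:

```python
from datetime import date

# Days between the effective epochs of the two systems (1899-12-30 and 1904-01-01)
OFFSET_1904_TO_1900 = (date(1904, 1, 1) - date(1899, 12, 30)).days  # 1462


def harmonize_serials(serials, system):
    """Return serial numbers expressed in the 1900 date system.

    system is 1900 or 1904 and must be known for each source file.
    """
    if system == 1904:
        return [serial + OFFSET_1904_TO_1900 for serial in serials]
    if system == 1900:
        return list(serials)
    raise ValueError(f"unknown date system: {system}")


# The same calendar date (2 July 2014) stored by two differently configured workbooks
print(harmonize_serials([41822], 1900))  # [41822]
print(harmonize_serials([40360], 1904))  # [41822]
```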

Storing data in a universal, open, and static format will help deal with this problem. Try tab-delimited (tab separated values or TSV) or comma-delimited (comma separated values or CSV). CSV files are plain text files where the columns are separated by commas, hence ‘comma separated values’ or CSV. The advantage of a CSV file over an Excel/SPSS/etc. file is that we can open and read a CSV file using just about any software, including plain text editors like TextEdit or Notepad. Data in a CSV file can also be easily imported into other formats and environments, such as SQLite and R. We’re not tied to a certain version of a certain expensive program when we work with CSV files, so it’s a good format to work with for maximum portability and endurance. Most spreadsheet programs can save to delimited text formats like CSV easily, although they may give you a warning during the file export.

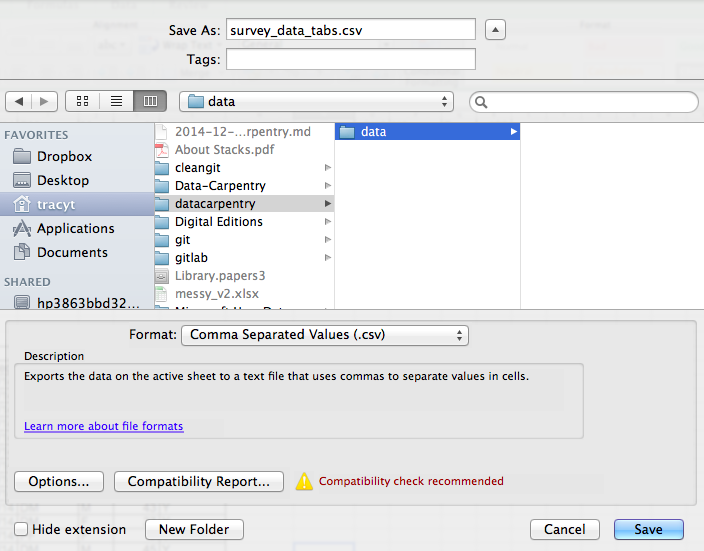

To save a file you have opened in Excel in CSV format:

- From the top menu select ‘File’ and ‘Save as’.

- In the ‘Format’ field, from the list, select ‘Comma Separated Values’ (*.csv).

- Double check the file name and the location where you want to save it and hit ‘Save’.

An important note for backwards compatibility: you can open CSV files in Excel!
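To see what a CSV file actually contains, the sketch below writes a small table with Python's standard csv module and reads it back. It uses an in-memory buffer (io.StringIO) so nothing is written to disk; with a real file you would pass open(path, "w", newline="") instead, as the csv module documentation recommends. The column names and values are hypothetical:

```python
import csv
import io

rows = [["record_id", "plot_id", "weight_grams"], [1, 2, 40], [2, 3, 12]]

# Write the table as comma-separated text
buffer = io.StringIO()
csv.writer(buffer).writerows(rows)
text = buffer.getvalue()
print(text)

# Reading back: every field comes back as a string, so any conversion is explicit
parsed = list(csv.reader(io.StringIO(text)))
print(parsed[1])  # ['1', '2', '40']
```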

A Note on Cross-platform Operability

By default, most coding and statistical environments expect UNIX-style line endings (ASCII LF character) as representing line breaks. However, Windows uses an alternate line ending signifier (ASCII CR LF characters) by default for legacy compatibility with Teletype-based systems.

As such, when exporting to CSV using Excel, your data in text format will look like this:

data1,data2

1,2

4,5

When opening your CSV file in Excel again, it will parse it as follows:

However, if you open your CSV file on a different system that does not parse the CR character it will interpret your CSV file differently:

Your data in text format then look like this:

data1

data2

1

2

…

You will then see a weird character or possibly the string CR or \r:

thus causing terrible things to happen to your data. For example, 2\r is not a valid integer, and thus will throw an error (if you’re lucky) when you attempt to operate on it in R or Python. Note that this happens on Excel for OSX as well as Windows, due to legacy Windows compatibility.

There are a handful of solutions for enforcing uniform UNIX-style line endings on your exported CSV files:

- When exporting from Excel, save as a “Windows comma separated (.csv)” file

-

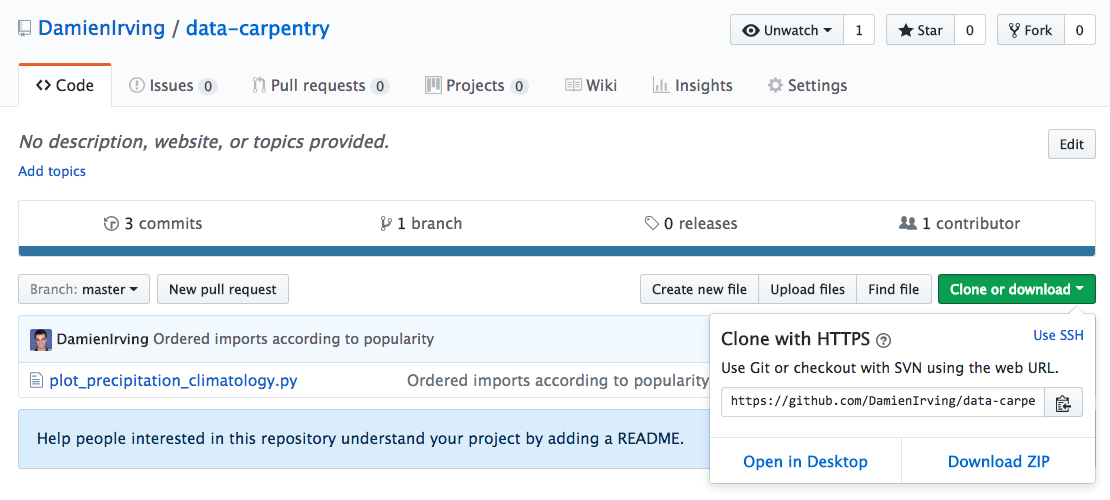

If you store your data file under version control using Git, edit the

.git/configfile in your repository to automatically translate\r\nline endings into\n. Add the following to the file (see the detailed tutorial):[filter "cr"] clean = LC_CTYPE=C awk '{printf(\"%s\\n\", $0)}' | LC_CTYPE=C tr '\\r' '\\n' smudge = tr '\\n' '\\r'`

.gitattributes that contains the line:

*.csv filter=cr

- Use dos2unix (available on OSX, *nix, and Cygwin) on local files to standardize line endings.
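Python offers one more option. When a file is opened in text mode with the default newline=None, Python's universal newlines mode translates \r\n and \r into \n as it reads. The sketch below applies the same idea to a string, and also reproduces the problem described above, where splitting only on LF leaves a stray CR in the last field. The function name is hypothetical:

```python
def to_unix_line_endings(text):
    """Convert Windows (CR LF) and old Mac (CR) line endings to UNIX-style LF."""
    return text.replace("\r\n", "\n").replace("\r", "\n")


windows_csv = "data1,data2\r\n1,2\r\n4,5\r\n"
print(repr(to_unix_line_endings(windows_csv)))  # 'data1,data2\n1,2\n4,5\n'

# What goes wrong if only LF is treated as a line break: the CR stays in the last field
last_field = windows_csv.split("\n")[1].split(",")[1]
print(repr(last_field))  # '2\r'
```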

A note on R and .xlsx

There are R packages that can read .xls or .xlsx files (as well as Google spreadsheets). It is even possible to access different worksheets in the .xlsx documents.

But

- some of these only work on Windows

- this equates to replacing a (simple but manual) export to csv with additional complexity/dependencies in the data analysis R code

- data formatting best practices still apply

- Is there really a good reason why csv (or similar) is not adequate?

Caveats on commas

In some datasets, the data values themselves may include commas (,). In that case, the software which you use (including Excel) will most likely incorrectly display the data in columns. This is because the commas which are a part of the data values will be interpreted as delimiters.

If you are working with data that contains commas, you likely will need to use another delimiter when working in a spreadsheet. In this case, consider using tabs as your delimiter and working with TSV files. TSV files can be exported from spreadsheet programs in the same way as CSV files. For more of a discussion on data formats and potential issues with commas within datasets see the discussion page.
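Many CSV writers sidestep the problem by quoting any value that contains the delimiter. As a sketch, Python's standard csv module does this by default (its QUOTE_MINIMAL setting), and the same writer produces TSV when given delimiter="\t". The example row is hypothetical:

```python
import csv
import io

rows = [["species", "note"], ["Dipodomys spectabilis", "trap 3, north edge"]]

# CSV: fields containing a comma are wrapped in double quotes
csv_buffer = io.StringIO()
csv.writer(csv_buffer).writerows(rows)
print(csv_buffer.getvalue())

# TSV: with tabs as the delimiter, the comma needs no quoting
tsv_buffer = io.StringIO()
csv.writer(tsv_buffer, delimiter="\t").writerows(rows)
print(tsv_buffer.getvalue())

# Reading with the matching delimiter recovers the original values in both cases
print(list(csv.reader(io.StringIO(csv_buffer.getvalue()))) == rows)  # True
print(list(csv.reader(io.StringIO(tsv_buffer.getvalue()), delimiter="\t")) == rows)  # True
```

Quoting only helps if the software reading the file honours it, so switching to tabs, as suggested above, remains the more robust choice when sharing data widely.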

Key Points

Data stored in common spreadsheet formats will often not be read correctly into data analysis software, introducing errors into your data.

Exporting data from spreadsheets to formats like CSV or TSV puts it in a format that can be used consistently by most programs.

Before we start

Overview

Teaching: 30 min

Exercises: 0 min

Questions

What is Python and why should I learn it?

Objectives

Present motivations for using Python.

Organize files and directories for a set of analyses as a Python project, and understand the purpose of the working directory.

How to work with Jupyter Notebook and Spyder.

Know where to find help.

Demonstrate how to provide sufficient information for troubleshooting with the Python user community.

What is Python?

Python is a general purpose programming language that supports rapid development of data analytics applications. The word “Python” is used to refer to both the programming language and the tool that executes the scripts written in the Python language.

Its main advantages are:

- Free

- Open-source

- Available on all major platforms (macOS, Linux, Windows)

- Supported by Python Software Foundation

- Supports multiple programming paradigms

- Has a large community

- Rich ecosystem of third-party packages

So, why do you need Python for data analysis?

- Easy to learn: Python is easier to learn than many other programming languages. This is important because lower barriers mean it is easier for new members of the community to get up to speed.

- Reproducibility: Reproducibility is the ability to obtain the same results using the same dataset(s) and analysis.

  Data analysis written as a Python script can be reproduced on any platform. Moreover, if you collect more data or correct existing data, you can quickly re-run your analysis!

  An increasing number of journals and funding agencies expect analyses to be reproducible, so knowing Python will give you an edge with these requirements.

- Versatility: Python is a versatile language that integrates with many existing applications to enable something completely amazing. For example, one can use Python to generate manuscripts, so that if you need to update your data, analysis procedure, or change something else, you can quickly regenerate all the figures and your manuscript will be updated automatically.

  Python can read text files, connect to databases, and work with many other data formats, on your computer or on the web.

- Interdisciplinary and extensible: Python provides a framework that allows anyone to combine approaches from different research disciplines (and beyond) to best suit your analysis needs.

- Python has a large and welcoming community: Thousands of people use Python daily. Many of them are willing to help you through mailing lists and websites, such as Stack Overflow and the Anaconda community portal.

- Free and Open-Source Software (FOSS)… and Cross-Platform: We know we have already said that, but it is worth repeating.

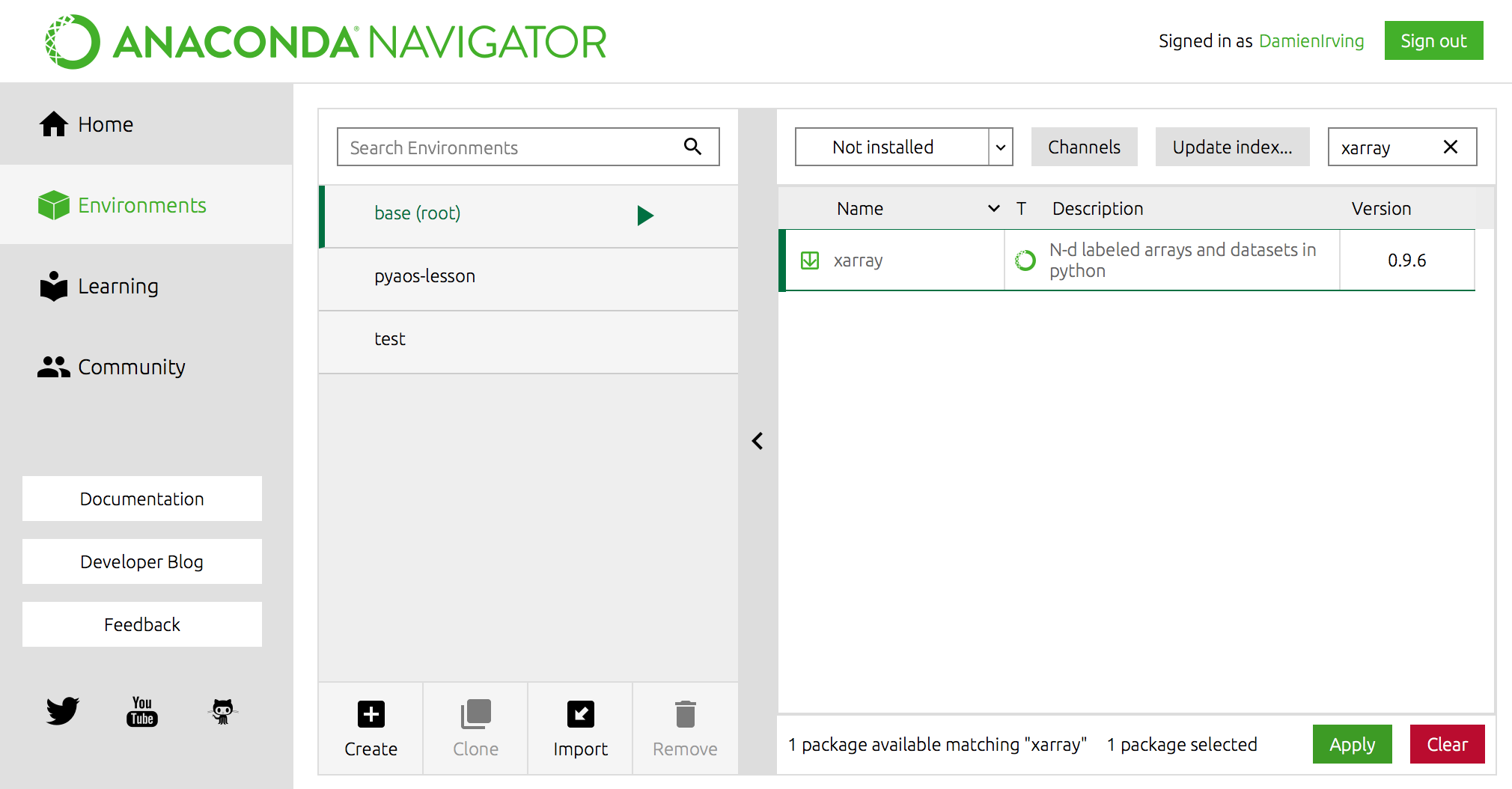

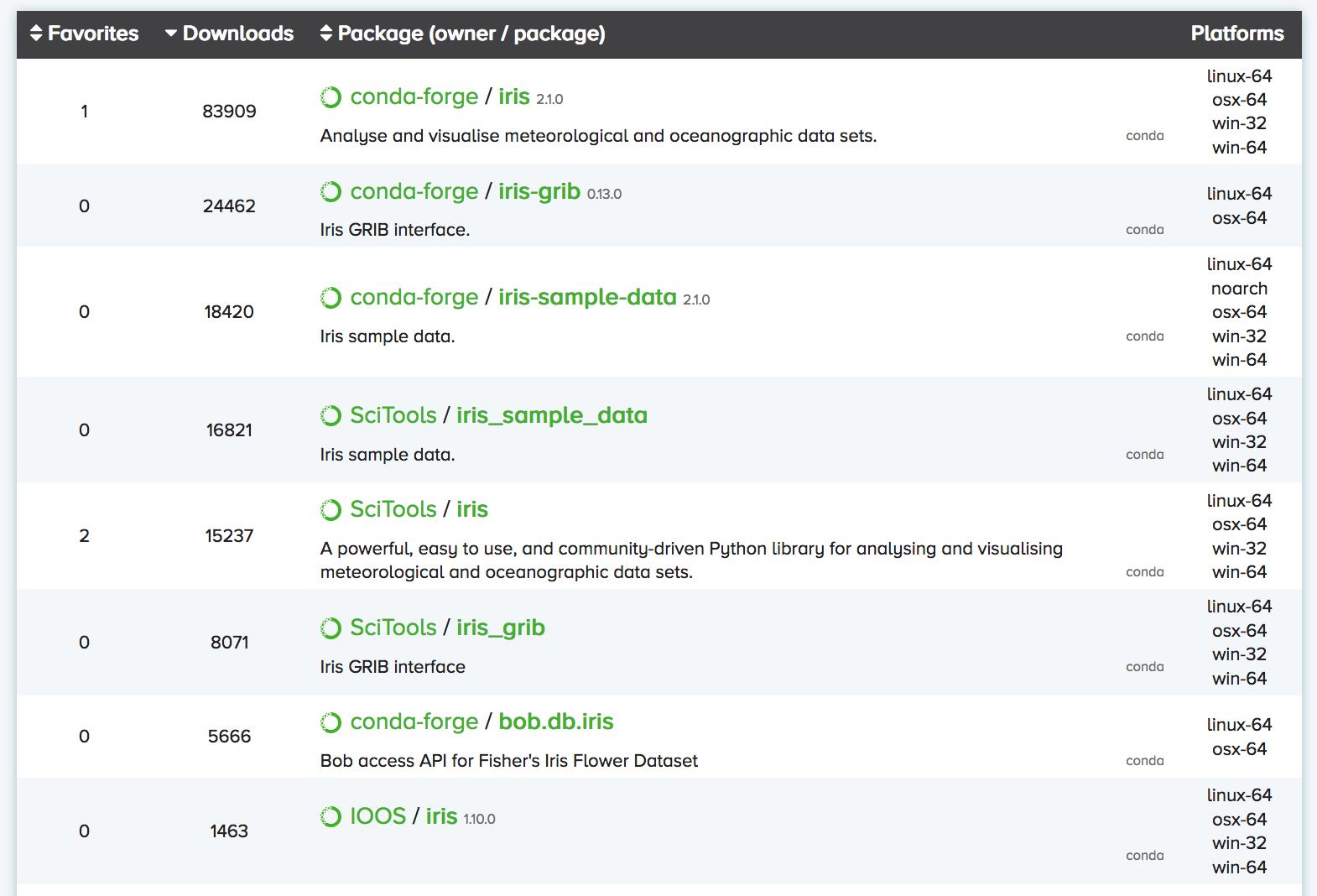

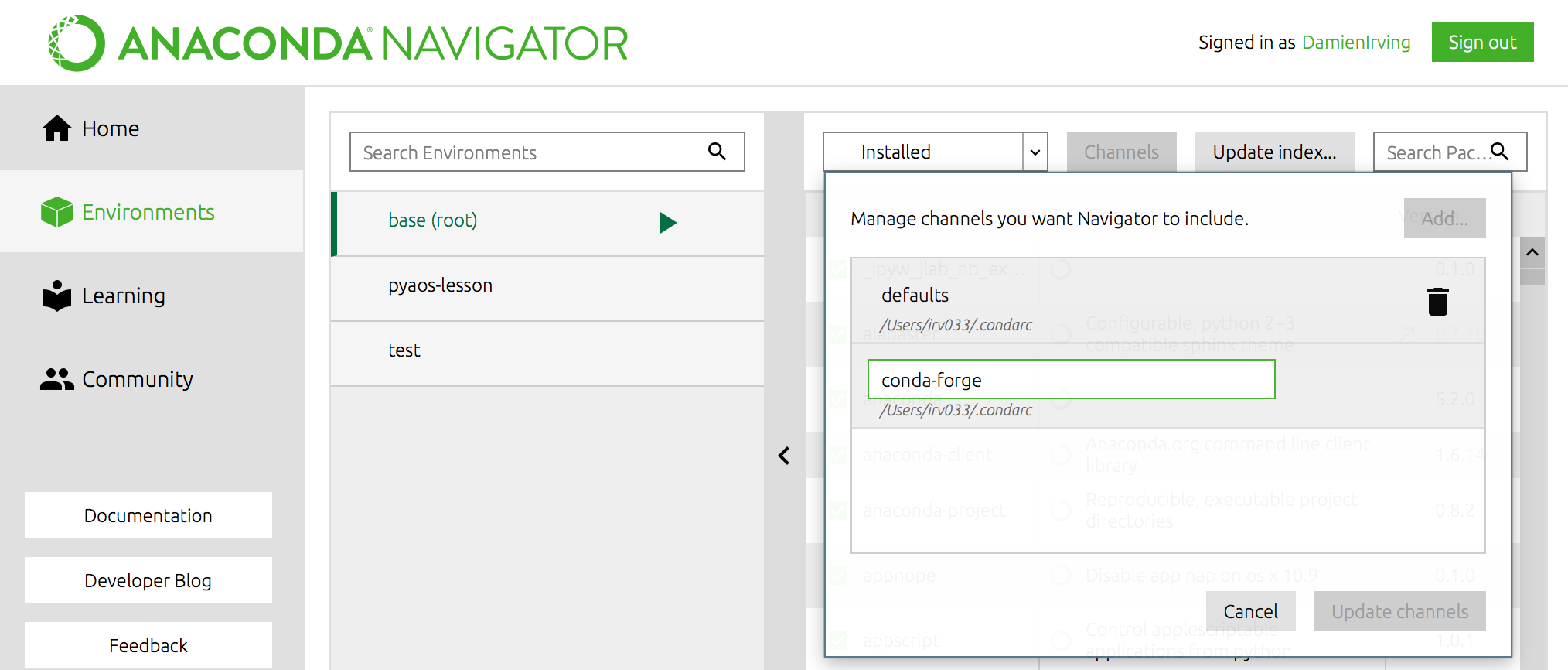

Knowing your way around Anaconda

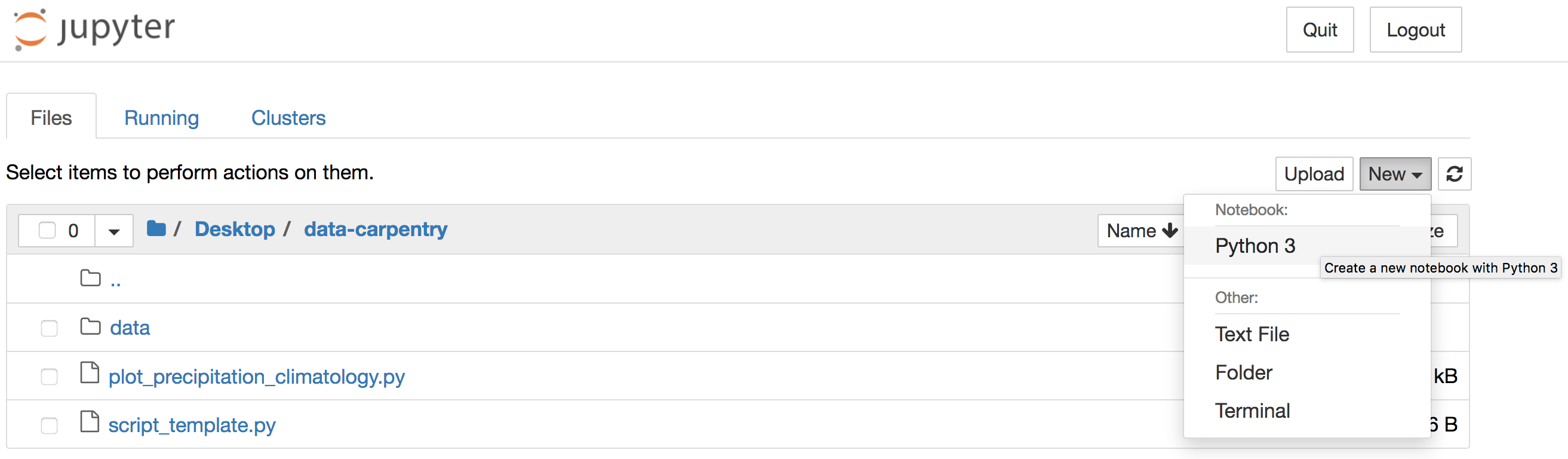

The Anaconda distribution of Python includes many popular packages and tools, such as the IPython console, Jupyter Notebook, and Spyder IDE. Have a quick look around the Anaconda Navigator. You can launch programs from the Navigator or use the command line.

The Jupyter Notebook is an open-source web application that allows you to create and share documents that combine code, graphs, and narrative text. Spyder is an Integrated Development Environment that allows one to write Python scripts and interact with the Python software from within a single interface.

Anaconda also comes with a package manager called conda, which makes it easy to install and update additional packages.

Research Project: Best Practices

It is a good idea to keep a set of related data, analyses, and text in a single folder. All scripts and text files within this folder can then use relative paths to the data files. Working this way makes it a lot easier to move around your project and share it with others.

Organizing your working directory

Using a consistent folder structure across your projects will help you keep things organized, and will also make it easy to find/file things in the future. This can be especially helpful when you have multiple projects. In general, you may wish to create separate directories for your scripts, data, and documents.

- data/: Use this folder to store your raw data. For the sake of transparency and provenance, you should always keep a copy of your raw data. If you need to clean up data, do it programmatically (i.e. with scripts) and make sure to separate cleaned-up data from the raw data. For example, you can store raw data in ./data/raw/ and clean data in ./data/clean/.

- documents/: Use this folder to store outlines, drafts, and other text.

- scripts/: Use this folder to store your (Python) scripts for data cleaning, analysis, and plotting that you use in this particular project.

You may need to create additional directories depending on your project needs, but these should form the backbone of your project’s directory. For this workshop, we will need a data/ folder to store our raw data, and we will later create a data_output/ folder when we learn how to export data as CSV files.
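The folder layout described above can be created by hand or, as a sketch, with Python's standard pathlib module. The helper name and the project folder name my_project are hypothetical; because the path is relative, the folders are created inside the current working directory:

```python
from pathlib import Path


def create_project_skeleton(root):
    """Create the data/, documents/ and scripts/ layout under root and list its folders."""
    root = Path(root)
    for sub in ["data/raw", "data/clean", "documents", "scripts"]:
        # parents=True also creates data/ and root itself; exist_ok=True makes reruns harmless
        (root / sub).mkdir(parents=True, exist_ok=True)
    # as_posix() gives forward slashes on every platform
    return sorted(p.relative_to(root).as_posix() for p in root.rglob("*") if p.is_dir())


if __name__ == "__main__":
    # On a fresh folder this prints:
    # ['data', 'data/clean', 'data/raw', 'documents', 'scripts']
    print(create_project_skeleton("my_project"))
```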

What is Programming and Coding?

Programming is the process of writing “programs” that a computer can execute and produce some (useful) output. Programming is a multi-step process that involves the following steps:

- Identifying the aspects of the real-world problem that can be solved computationally

- Identifying (the best) computational solution

- Implementing the solution in a specific computer language

- Testing, validating, and adjusting the implemented solution.

While “Programming” refers to all of the above steps, “Coding” refers to step 3 only: “Implementing the solution in a specific computer language”. It’s important to note that “the best” computational solution must consider factors beyond the computer. Who is using the program, what resources/funds does your team have for this project, and the available timeline all shape and mold what “best” may be.

If you are working with Jupyter notebook:

You can type Python code into a code cell and then execute the code by pressing Shift+Return.

Output will be printed directly under the input cell.

You can recognise a code cell by the In[ ]: at the beginning of the cell and output by Out[ ]:.

Pressing the + button in the menu bar will add a new cell.

All your commands as well as any output will be saved with the notebook.

If you are working with Spyder:

You can either use the console or use script files (plain text files that contain your code). The console pane (in Spyder, the bottom right panel) is the place where commands written in the Python language can be typed and executed immediately by the computer. It is also where the results will be shown. You can execute commands directly in the console by pressing Return, but they will be “lost” when you close the session. Spyder uses the IPython console by default.

Since we want our code and workflow to be reproducible, it is better to type the commands in the script editor, and save them as a script. This way, there is a complete record of what we did, and anyone (including our future selves!) has an easier time reproducing the results on their computer.

Spyder allows you to execute commands directly from the script editor by using the run buttons on top. To run the entire script click Run file or press F5; to run the current line click Run selection or current line or press F9; other run buttons allow you to run script cells or go into debug mode. When using F9, the command on the current line in the script (indicated by the cursor) or all of the commands in the currently selected text will be sent to the console and executed.

At some point in your analysis you may want to check the content of a variable or the structure of an object, without necessarily keeping a record of it in your script. You can type these commands and execute them directly in the console. Spyder provides the Ctrl+Shift+E and Ctrl+Shift+I shortcuts to allow you to jump between the script and the console panes.

If Python is ready to accept commands, the IPython console shows an In [..]: prompt with the current console line number in []. If it receives a command (by typing, copy-pasting, or sent from the script editor), Python will execute it, display the results in the Out [..]: cell, and come back with a new In [..]: prompt waiting for new commands.

If Python is still waiting for you to enter more data because the command isn’t complete yet, the console will show a ...: prompt. It means that you haven’t finished entering a complete command. This can be because you have not typed a closing parenthesis (), ], or }) or quotation mark. When this happens, and you thought you had finished typing your command, click inside the console window and press Esc; this will cancel the incomplete command and return you to the In [..]: prompt.

How to learn more after the workshop?

The material we cover during this workshop will give you an initial taste of how you can use Python to analyze data for your own research. However, you will need to learn more to do advanced operations such as cleaning your dataset, using statistical methods, or creating beautiful graphics. The best way to become proficient and efficient at Python, as with any other tool, is to use it to address your actual research questions. As a beginner, it can feel daunting to have to write a script from scratch, and given that many people make their code available online, modifying existing code to suit your purpose might make it easier for you to get started.

Seeking help

- check under the Help menu

- type help()

- type ?object or help(object) to get information about an object

- Python documentation

- Pandas documentation

Finally, a generic Google or internet search “Python task” will often either send you to the appropriate module documentation or a helpful forum where someone else has already asked your question.

I am stuck… I get an error message that I don’t understand. Start by googling the error message. However, this doesn’t always work very well, because often, package developers rely on the error catching provided by Python. You end up with general error messages that might not be very helpful to diagnose a problem (e.g. “IndexError: list index out of range”). If the message is very generic, you might also include the name of the function or package you’re using in your query.

However, you should check Stack Overflow. Search using the [python] tag. Most questions have already been answered, but the challenge is to use the right words in the search to find the answers:

https://stackoverflow.com/questions/tagged/python?tab=Votes

Asking for help

The key to receiving help from someone is for them to rapidly grasp your problem. You should make it as easy as possible to pinpoint where the issue might be.

Try to use the correct words to describe your problem. For instance, a package is not the same thing as a library. Most people will understand what you meant, but others have really strong feelings about the difference in meaning. The key point is that it can make things confusing for people trying to help you. Be as precise as possible when describing your problem.

If possible, try to reduce what doesn’t work to a simple reproducible example. If you can reproduce the problem using a very small data frame instead of your 50,000 rows and 10,000 columns one, provide the small one with the description of your problem. When appropriate, try to generalize what you are doing so even people who are not in your field can understand the question. For instance, instead of using a subset of your real dataset, create a small (3 columns, 5 rows) generic one.

Where to ask for help?

- The person sitting next to you during the workshop. Don’t hesitate to talk to your neighbor during the workshop, compare your answers, and ask for help. You might also be interested in organizing regular meetings following the workshop to keep learning from each other.

- Your friendly colleagues: if you know someone with more experience than you, they might be able and willing to help you.

- Stack Overflow: if your question hasn’t been answered before and is well crafted, chances are you will get an answer in less than 5 min. Remember to follow their guidelines on how to ask a good question.

- Python mailing lists

More resources

Key Points

Python is an open source and platform independent programming language.

Jupyter Notebook and the Spyder IDE are great tools to code in and interact with Python. With the large Python community it is easy to find help on the internet.

Short Introduction to Programming in Python

Overview

Teaching: 30 min

Exercises: 0 min

Questions

What is Python?

Why should I learn Python?

Objectives

Describe the advantages of using programming vs. completing repetitive tasks by hand.

Define the following data types in Python: strings, integers, and floats.

Perform mathematical operations in Python using basic operators.

Define the following as it relates to Python: lists, tuples, and dictionaries.

Let’s get started

Open up Jupyter Notebook. Go to the folder where your data is stored; this should be Desktop/data-carpentry. To run a cell in Jupyter, put a value on the last line of the cell and run the cell (Shift+Enter); the value will be printed below the cell:

3.145

Interpreter

Python is an interpreted language which can be used in two ways:

- “Interactively”: when you use it as an “advanced calculator” executing one command at a time.

2 + 2

4

print("Hello World")

Hello World

- “Scripting” Mode: executing a series of “commands” saved in a text file, usually with a .py extension after the name of your file:

%run "counter_example_script.py"

Hello world

I count to 5

0

1

2

3

4

5

my counting is done
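The lesson does not show the contents of counter_example_script.py. As a minimal sketch, here is a hypothetical version that would produce the output above, assuming the script simply counts from 0 to 5 inclusive:

```python
# counter_example_script.py (hypothetical reconstruction; the original file is not shown)
print("Hello world")
print("I count to 5")

# range(6) yields 0, 1, 2, 3, 4, 5
for i in range(6):
    print(i)

print("my counting is done")
```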

Introduction to Python built-in data types

Strings, integers, and floats

One of the most basic things we can do in Python is assign values to variables:

text = "Data Carpentry" # An example of a string

number = 42 # An example of an integer

pi_value = 3.1415 # An example of a float

Here we’ve assigned data to the variables text, number and pi_value, using the assignment operator =. To review the value of a variable, we can type the name of the variable into the interpreter and press Return:

text

'Data Carpentry'

Everything in Python has a type. To get the type of something, we can pass it to the built-in function type:

type(text)

<class 'str'>

type(number)

<class 'int'>

type(pi_value)

<class 'float'>

The variable text is of type str, short for “string”. Strings hold sequences of characters, which can be letters, numbers, punctuation or more exotic forms of text (even emoji!).
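Because a string is a sequence of characters, the built-in len function counts its characters and square-bracket indexing picks out a single character, counting from 0. A short sketch using the text value from above; the names n_chars, first_char and snake are only illustrative:

```python
text = "Data Carpentry"

n_chars = len(text)      # 14: "Data" (4) + space (1) + "Carpentry" (9)
first_char = text[0]     # "D": indexing starts at 0
snake = "🐍"             # an emoji counts as a single character in Python 3

print(n_chars, first_char, len(snake))
```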

We can also see the value of something using another built-in function, print:

print(text)

Data Carpentry

print(number)

42

This may seem redundant, but in fact it’s the only way to display output in a script:

example.py

# A Python script file

# Comments in Python start with #

# The next line assigns the string "Data Carpentry" to the variable "text".

text = "Data Carpentry"

# The next line does nothing!

text

# The next line uses the print function to print out the value we assigned to "text"

print(text)

Running the script

$ python example.py

Data Carpentry

Notice that “Data Carpentry” is printed only once.

Tip: print and type are built-in functions in Python. Later in this lesson, we will introduce methods and user-defined functions. The Python documentation is excellent for reference on the differences between them.

Operators

We can perform mathematical calculations in Python using the basic operators +, -, /, *, ** and %:

2 + 2 # Addition

4

6 * 7 # Multiplication

42

2 ** 16 # Power

65536

13 % 5 # Modulo

3

We can also use comparison operators (<, >, ==, !=, <=, >=) and logical operators (and, or, not). Comparisons return a value of type boolean (bool), which is either True or False.

3 > 4

False

True and True

True

True or False

True

True and False

False
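The operator list includes division (/), which the examples above do not show. In Python 3, / always returns a float, even when both operands are integers, and a comparison returns a value of type bool. A quick check, with illustrative variable names:

```python
quotient = 7 / 2          # true division: 3.5
exact = 6 / 3             # still a float: 2.0
comparison = 3 > 4        # False

print(quotient, type(quotient))      # 3.5 <class 'float'>
print(exact, type(exact))            # 2.0 <class 'float'>
print(comparison, type(comparison))  # False <class 'bool'>
```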

Sequences: Lists and Tuples

Lists

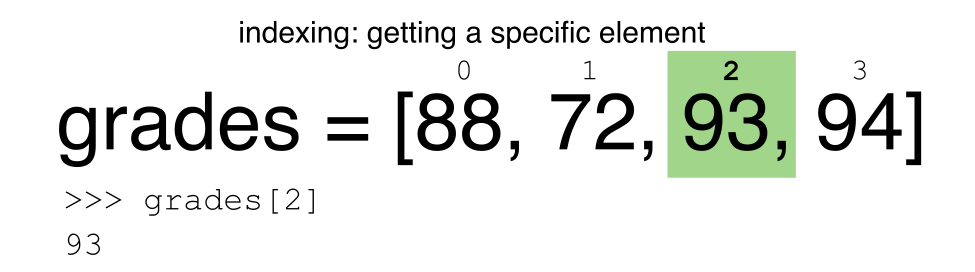

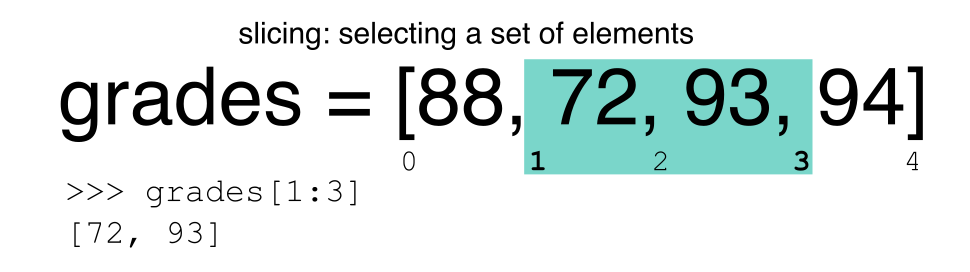

Lists are a common data structure to hold an ordered sequence of elements. Each element can be accessed by an index. Note that Python indexes start with 0 instead of 1:

numbers = [1, 2, 3]

numbers[0]

1

A for loop can be used to access the elements in a list or other Python data structure one at a time:

for num in numbers:
    print(num)

1

2

3

Indentation is very important in Python: it is how Python marks a block of code. Note that the second line in the example above is indented. In the standard interactive interpreter, three chevrons >>> indicate the prompt, and three dots ... are Python’s prompt for the continuation lines of a block. [Note: you do not type >>> or ....]

To add elements to the end of a list, we can use the append method. Methods are a way to interact with an object (a list, for example). We can invoke a method using the dot . followed by the method name and a list of arguments in parentheses. Let’s look at an example using append:

numbers.append(4)

print(numbers)

[1, 2, 3, 4]
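Combining a for loop with append is a common way to build a new list from an existing one. A small sketch; the name squares is just for illustration:

```python
numbers = [1, 2, 3, 4]

squares = []                    # start with an empty list
for num in numbers:
    squares.append(num ** 2)    # add each result to the end of the list

print(squares)                  # [1, 4, 9, 16]
```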

To find out what methods are available for an object, we can use the built-in help function:

help(numbers)

Help on list object:

class list(object)

| list() -> new empty list

| list(iterable) -> new list initialized from iterable's items

...

Tuples

A tuple is similar to a list in that it’s an ordered sequence of elements. However, tuples cannot be changed once created (they are “immutable”). Tuples are created by placing comma-separated values inside parentheses ().

# Tuples use parentheses

a_tuple = (1, 2, 3)

another_tuple = ('blue', 'green', 'red')

# Note: lists use square brackets

a_list = [1, 2, 3]

Tuples vs. Lists

- What happens when you execute a_list[1] = 5?

- What happens when you execute a_tuple[2] = 5?

- What does type(a_tuple) tell you about a_tuple?
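One way to check your answers to the exercise: assigning to a list element works, while assigning to a tuple element raises a TypeError because tuples are immutable. The sketch below catches that error so the cell keeps running; error_message is an illustrative name:

```python
a_tuple = (1, 2, 3)
a_list = [1, 2, 3]

a_list[1] = 5
print(a_list)            # [1, 5, 3]

error_message = None
try:
    a_tuple[2] = 5       # tuples cannot be changed in place
except TypeError as err:
    error_message = str(err)
print("TypeError:", error_message)

print(type(a_tuple))     # <class 'tuple'>
```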

Dictionaries

A dictionary is a container that holds pairs of objects: keys and values.

translation = {'one': 'first', 'two': 'second'}

translation['one']

'first'

Dictionaries work a lot like lists, except that you index them with keys. You can think about a key as a name or unique identifier for the value it corresponds to.

rev = {'first': 'one', 'second': 'two'}

rev['first']

'one'

To add an item to the dictionary we assign a value to a new key:

rev = {'first': 'one', 'second': 'two'}

rev['third'] = 'three'

rev

{'first': 'one', 'second': 'two', 'third': 'three'}

Using for loops with dictionaries is a little more complicated. We can do this in two ways:

for key, value in rev.items():
    print(key, '->', value)

first -> one

second -> two

third -> three

or

for key in rev.keys():
    print(key, '->', rev[key])

first -> one

second -> two

third -> three
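Dictionaries are handy for counting. As a sketch, the loop below tallies the species_id codes from the first nine rows of surveys.csv (typed in by hand here), using the get method with a default of 0 for codes not yet seen; species_ids and counts are illustrative names:

```python
species_ids = ["NL", "NL", "DM", "DM", "DM", "PF", "PE", "DM", "DM"]

counts = {}
for code in species_ids:
    # get returns 0 when the key is not in the dictionary yet
    counts[code] = counts.get(code, 0) + 1

for key, value in counts.items():
    print(key, '->', value)
```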

Changing dictionaries

- First, print the value of the rev dictionary to the screen.

- Reassign the value that corresponds to the key second so that it no longer reads “two” but instead 2.

- Print the value of rev to the screen again to see if the value has changed.
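A possible solution to the exercise, assuming rev still holds the three entries added above:

```python
rev = {'first': 'one', 'second': 'two', 'third': 'three'}

print(rev)            # {'first': 'one', 'second': 'two', 'third': 'three'}
rev['second'] = 2     # assigning to an existing key replaces its value
print(rev)            # {'first': 'one', 'second': 2, 'third': 'three'}
```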

Functions

Defining a section of code as a function in Python is done using the def keyword. For example, a function that takes two arguments and returns their sum can be defined as:

def add_function(a, b):
    result = a + b
    return result

z = add_function(20, 22)

print(z)

42
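Functions can also contain loops and call other functions. As an illustration, the hypothetical function total below (not part of the lesson materials) reuses add_function to add up the values in a list:

```python
def add_function(a, b):
    result = a + b
    return result


def total(values):
    """Return the sum of values by adding them one at a time."""
    running = 0
    for value in values:
        running = add_function(running, value)
    return running


print(total([20, 22]))      # 42
print(total([1, 2, 3, 4]))  # 10
```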

Key Points

Python is an interpreted language which can be used interactively (executing one command at a time) or in scripting mode (executing a series of commands saved in file).

One can assign a value to a variable in Python. Those variables can be of several types, such as string, integer, floating point and complex numbers.

Lists and tuples are similar in that they are ordered sequences of elements; they differ in that a tuple is immutable (cannot be changed).

Dictionaries are data structures that provide mappings between keys and values.

Starting With Data

Overview

Teaching: 30 min

Exercises: 30 min

Questions

How can I import data in Python?

What is Pandas?

Why should I use Pandas to work with data?

Objectives

Navigate the workshop directory and download a dataset.

Explain what a library is and what libraries are used for.

Describe what the Python Data Analysis Library (Pandas) is.

Load the Python Data Analysis Library (Pandas).

Use read_csv to read tabular data into Python.

Describe what a DataFrame is in Python.

Access and summarize data stored in a DataFrame.

Define indexing as it relates to data structures.

Perform basic mathematical operations and summary statistics on data in a Pandas DataFrame.

Create simple plots.

Working With Pandas DataFrames in Python

We can automate the process of performing data manipulations in Python. It’s efficient to spend time building the code to perform these tasks because once it’s built, we can use it over and over on different datasets that use a similar format. This makes our methods easily reproducible. We can also easily share our code with colleagues and they can replicate the same analysis.

Starting in the same spot

To help the lesson run smoothly, let’s ensure everyone is in the same directory. This should help us avoid path and file name issues. At this time please navigate to the workshop directory. If you are working in Jupyter Notebook (formerly IPython Notebook), be sure that you start your notebook in the workshop directory.

As a quick aside, there are Python libraries, such as the built-in os module, that can work with our directory structure; however, that is not our focus today.

Our Data

For this lesson, we will be using the Portal Teaching data, a subset of the data from Ernest et al., Long-term monitoring and experimental manipulation of a Chihuahuan Desert ecosystem near Portal, Arizona, USA.

We will be using files from the Portal Project Teaching Database.

This section will use the surveys.csv file that can be downloaded here:

https://ndownloader.figshare.com/files/2292172

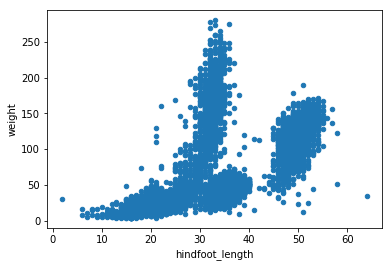

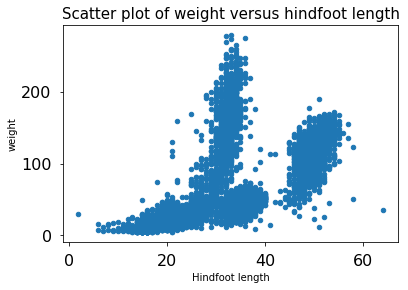

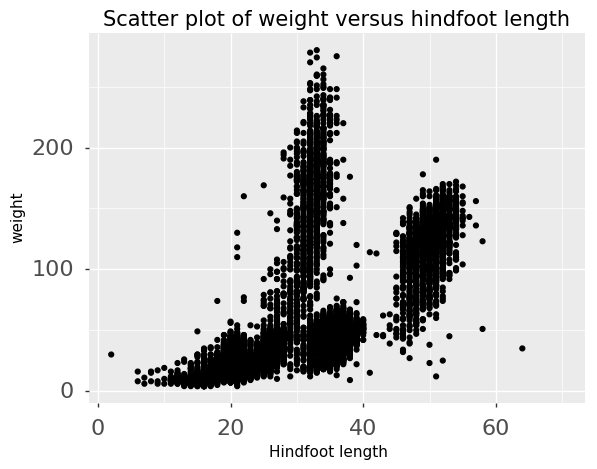

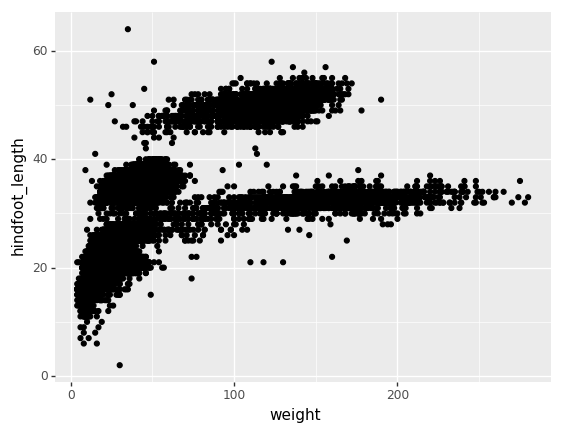

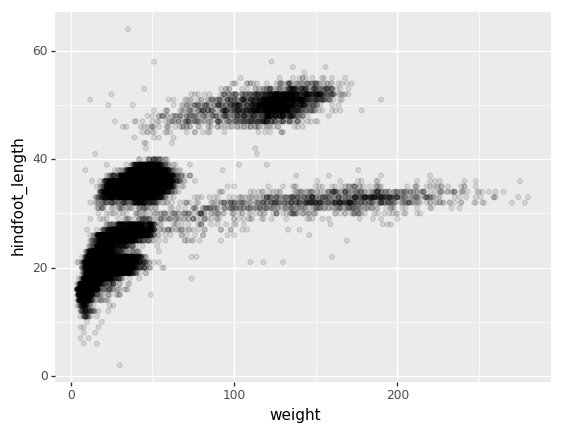

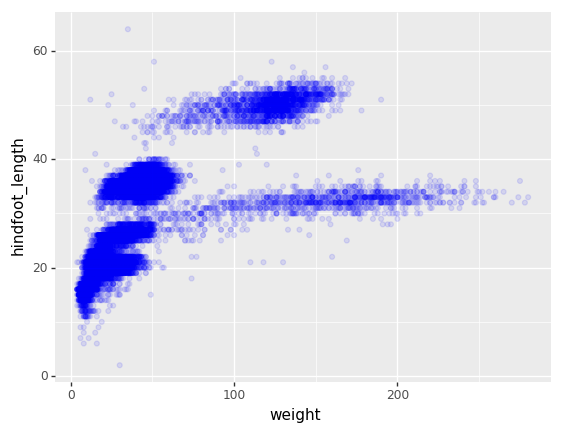

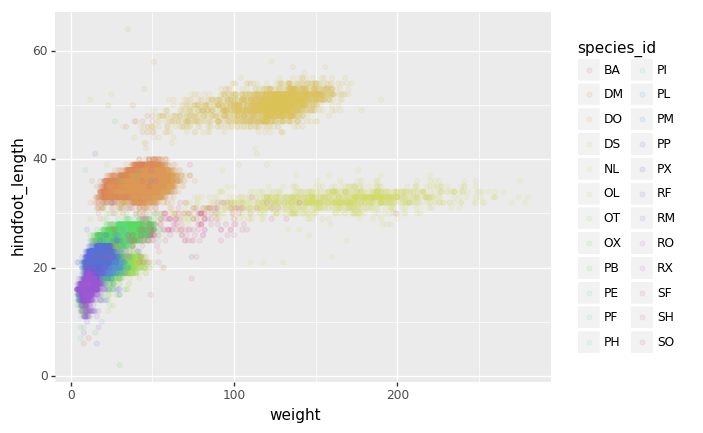

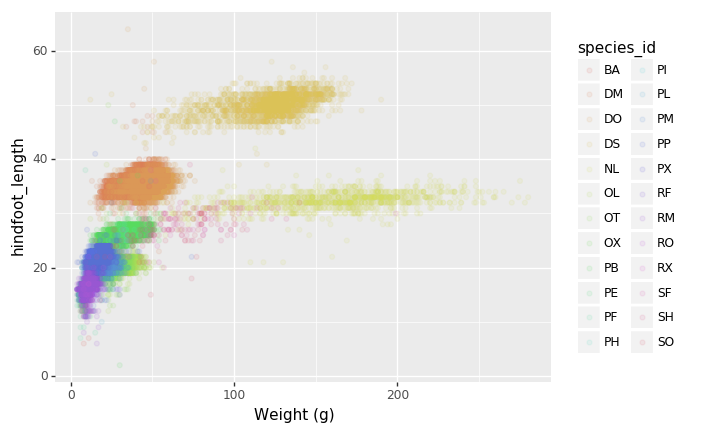

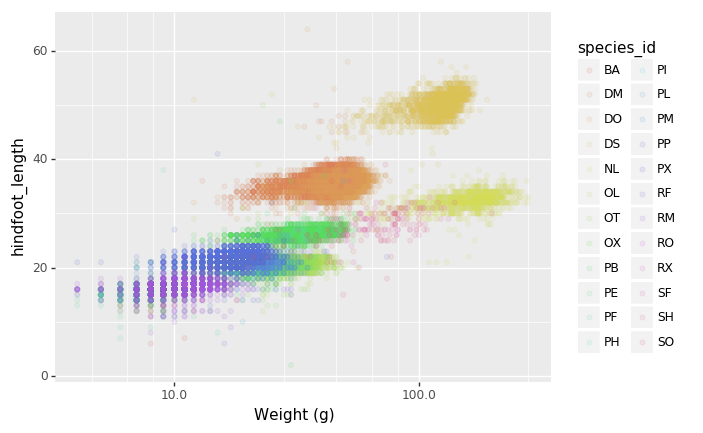

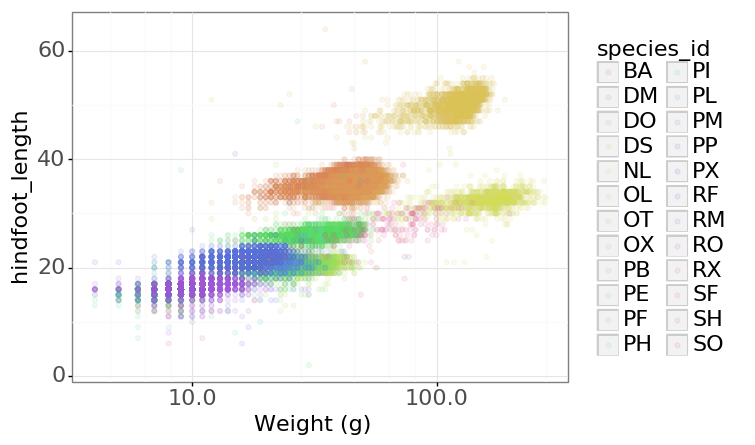

We are studying the species and weight of animals caught in sites in our study area. The dataset is stored as a .csv file: each row holds information for a single animal, and the columns represent:

| Column | Description |
|---|---|
| record_id | Unique id for the observation |
| month | month of observation |
| day | day of observation |
| year | year of observation |
| plot_id | ID of a particular site |
| species_id | 2-letter code |
| sex | sex of animal (“M”, “F”) |
| hindfoot_length | length of the hindfoot in mm |
| weight | weight of the animal in grams |

The first few rows of our first file look like this:

record_id,month,day,year,plot_id,species_id,sex,hindfoot_length,weight
1,7,16,1977,2,NL,M,32,
2,7,16,1977,3,NL,M,33,
3,7,16,1977,2,DM,F,37,
4,7,16,1977,7,DM,M,36,
5,7,16,1977,3,DM,M,35,
6,7,16,1977,1,PF,M,14,
7,7,16,1977,2,PE,F,,
8,7,16,1977,1,DM,M,37,
9,7,16,1977,1,DM,F,34,

About Libraries

A library in Python contains a set of tools (called functions) that perform tasks on our data. Importing a library is like getting a piece of lab equipment out of a storage locker and setting it up on the bench for use in a project. Once a library is set up, it can be used or called to perform the task(s) it was built to do.

Pandas in Python

One of the best options for working with tabular data in Python is to use the Python Data Analysis Library (a.k.a. Pandas). The Pandas library provides data structures, produces high quality plots with matplotlib and integrates nicely with other libraries that use NumPy arrays (NumPy is another Python library).

Python doesn’t load all of the libraries available to it by default. We have to add an import statement to our code in order to use library functions. To import a library, we use the syntax import libraryName. If we want to give the library a nickname to shorten the command, we can add as nickNameHere. An example of importing the pandas library using the common nickname pd is below.

import pandas as pd

Each time we call a function that’s in a library, we use the syntax LibraryName.FunctionName. Adding the library name with a . before the function name tells Python where to find the function. In the example above, we have imported Pandas as pd. This means we don’t have to type out pandas each time we call a Pandas function.
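The same import syntax works for any library. To try it without pandas, here is a sketch using Python’s built-in math module; the nickname m is arbitrary and chosen only for this example:

```python
import math           # full name: call functions as math.sqrt
import math as m      # nickname: call the same functions as m.sqrt

print(math.sqrt(16))  # 4.0
print(m.sqrt(16))     # 4.0, the same function under a shorter name
print(m is math)      # True: both names refer to the same module object
```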

Reading CSV Data Using Pandas

We will begin by locating and reading our survey data, which are in CSV format. CSV stands for Comma-Separated Values and is a common way to store formatted data. Other symbols may also be used as separators, so you might see tab-separated, colon-separated or space-separated files. It is quite easy to replace one separator with another to match your application. The first line in the file often has headers to explain what is in each column. CSV files (and files using other separators) make it easy to share data and can be imported and exported from many applications, including Microsoft Excel. For more details on CSV files, see the Data Organisation in Spreadsheets lesson.
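As a sketch of the separator point, read_csv accepts a sep argument. The example below gives pandas the same two data rows once as comma-separated text and once as tab-separated text, using io.StringIO in place of a file on disk; the variable names are illustrative:

```python
import io

import pandas as pd

comma_text = "record_id,month,day,year\n1,7,16,1977\n2,7,16,1977\n"
tab_text = comma_text.replace(",", "\t")   # same data, tab separator

from_commas = pd.read_csv(io.StringIO(comma_text))        # sep="," is the default
from_tabs = pd.read_csv(io.StringIO(tab_text), sep="\t")  # tell pandas about the tabs

print(from_commas.equals(from_tabs))  # True: both give the same DataFrame
```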

We can use Pandas’ read_csv function to pull the file directly into a DataFrame.

So What’s a DataFrame?

Pandas provides an object called DataFrame to represent tabular data. Each column of a DataFrame is a Series with a name, and all columns share the same index. We import a CSV table into a DataFrame using the read_csv function.

A DataFrame is a 2-dimensional data structure that can store data of different types (including strings, integers, floating point values, categorical values and more) in columns. It is similar to a spreadsheet, an SQL table or the data.frame in R. A DataFrame always has an index; by default, read_csv creates a 0-based integer index. An index refers to the position of an element in the data structure.
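To see these ideas on something small, the sketch below loads the first five rows of surveys.csv (typed into a string rather than read from data/surveys.csv) and inspects the index, the column names and the type of a single column; sample and df are illustrative names:

```python
import io

import pandas as pd

sample = """record_id,month,day,year,plot_id,species_id,sex,hindfoot_length,weight
1,7,16,1977,2,NL,M,32,
2,7,16,1977,3,NL,M,33,
3,7,16,1977,2,DM,F,37,
4,7,16,1977,7,DM,M,36,
5,7,16,1977,3,DM,M,35,
"""

df = pd.read_csv(io.StringIO(sample))

print(df.index)                # the default 0-based index (a RangeIndex from 0 to 4)
print(list(df.columns))        # the 9 column names from the header row
print(type(df["species_id"]))  # each column is a pandas Series
print(df["species_id"].index.equals(df.index))  # True: columns share the DataFrame's index
```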

# Note that pd.read_csv is used because we imported pandas as pd

pd.read_csv("data/surveys.csv")

The above command yields the output below:

record_id month day year plot_id species_id sex hindfoot_length weight

0 1 7 16 1977 2 NL M 32 NaN

1 2 7 16 1977 3 NL M 33 NaN

2 3 7 16 1977 2 DM F 37 NaN

3 4 7 16 1977 7 DM M 36 NaN

4 5 7 16 1977 3 DM M 35 NaN

...

35544 35545 12 31 2002 15 AH NaN NaN NaN

35545 35546 12 31 2002 15 AH NaN NaN NaN

35546 35547 12 31 2002 10 RM F 15 14

35547 35548 12 31 2002 7 DO M 36 51

35548 35549 12 31 2002 5 NaN NaN NaN NaN

[35549 rows x 9 columns]

We can see that 35,549 rows were parsed. Each row has 9 columns. The first column shown is the index of the DataFrame. The index is used to identify the position of the data, but it is not an actual column of the DataFrame.
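Two details in the output above can be checked on a small sample: the index is not counted among the 9 columns, and empty fields (such as the blank weight values) are read as NaN. A sketch using rows 6 to 8 of the file, typed in by hand rather than read from data/surveys.csv:

```python
import io

import pandas as pd

sample = """record_id,month,day,year,plot_id,species_id,sex,hindfoot_length,weight
6,7,16,1977,1,PF,M,14,
7,7,16,1977,2,PE,F,,
8,7,16,1977,1,DM,M,37,
"""

df = pd.read_csv(io.StringIO(sample))

print(df.shape)                   # (3, 9): the index is not one of the 9 columns
print(df["weight"].isna().sum())  # 3: every weight field in this sample is empty
print(df["hindfoot_length"])      # record 7 has no hindfoot_length, shown as NaN
```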